Varigence Blog

Dev Diary – Defining Mapping Data Flow Parameters with Biml

The previous post on developing Delta Lake using Azure Data Factory Mapping Data Flows covered how and why we use parameters in our BimlFlex-generated Mapping Data Flow data logistics processes.

As a brief recap, parameters for a Mapping Data Flow can both inherit values from the process that executes it (the Execute Pipeline) as well as hold values that can be used to define the data flow itself.

For BimlFlex this means the Mapping Data Flows can integrate with the BimlCatalog, the data logistics control framework, and provide a central access to important information received from the metadata framework. For example, parameters contain the composition of the Integration Key and the names of standard fields as they have been configured in the BimlFlex App.

Adding Parameters using Biml

The code snippet below uses the example from the previous post on this topic and adds a number of parameters to the Mapping Data Flow. Once defined, these become accessible to the Execute Pipeline that calls the Mapping Data Flow so that information can be passed on.

The first four parameters of this example reflect this behaviour. The start time of the individual process and its batch (if there is one) are passed to the Mapping Data Flow so that these values can be added to the resulting data set.

The other parameters are examples of attributing both the name of custom columns as well as their default values. This is really useful to dynamically add columns to your data flow.

This approach allows any custom or standard configuration to make its way to the generated data logistics process.

As a final thing to note, some parameters are defined as an array of strings by using the string[] data type. Mapping Data Flows has a variety of functionality that allows easy use of an array of values. This is especially helpful when working with areas where combinations of values are required, such as for instance calculation of full row hash values / checksums or managing composite keys. Future posts will go into this concept in more detail.

For now, let's have a look at the syntax for defining parameters in Mapping Data Flows - using the Parameters segment.

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<DataFactories>

<DataFactory Name="bfx-dev-deltalake-demo">

<Dataflows>

<MappingDataflow Name="HelloWorld">

<Sources>

<InlineAzureSqlDataset

Name="HelloWorldExampleSource"

LinkedServiceName="ExampleSourceLS"

AllowSchemaDrift="false"

ValidateSchema="false" />

</Sources>

<Sinks>

<InlineAzureSqlDataset

Name="HelloWorldExampleTarget"

LinkedServiceName="ExampleTargetLS"

SkipDuplicateInputColumns="true"

SkipDuplicateOutputColumns="false"

InputStreamName="bla.Output" />

</Sinks>

<Parameters>

<Parameter Name="BatchExecutionId" DataType="integer">-1</Parameter>

<Parameter Name="BatchStartTime" DataType="string">'1900-01-01'</Parameter>

<Parameter Name="RowAuditId" DataType="integer">-1</Parameter>

<Parameter Name="ProcessStartTime" DataType="string">'1900-01-01'</Parameter>

<Parameter Name="EffectiveFromDateAttributeName" DataType="string">'FlexRowEffectiveFromDate'</Parameter>

<Parameter Name="RowRecordSourceAttributeName" DataType="string">'FlexRowRecordSource'</Parameter>

<Parameter Name="RowHashAttributeName" DataType="string">'FlexRowHash'</Parameter>

<Parameter Name="RowSandingValue" DataType="string">'~'</Parameter>

<Parameter Name="RowSourceIdAttributeName" DataType="string">'FlexRowSourceId'</Parameter>

<Parameter Name="RowRecordSource" DataType="string">'awlt'</Parameter>

<Parameter Name="SourceKeyAccount" DataType="string[]">['AccountCodeAlternateKey']</Parameter>

<Parameter Name="SourceColumnsAccount" DataType="string[]">['AccountDescription','AccountType','Operator','CustomMembers','ValueType','CustomMemberOptions']</Parameter>

<Parameter Name="SchemaNameSource" DataType="string">'dbo'</Parameter>

<Parameter Name="TableNameSource" DataType="string">'Account'</Parameter>

</Parameters>

</MappingDataflow>

</Dataflows>

<LinkedServices>

<AzureSqlDatabase Name="ExampleSourceLS" ConnectionString="data source=example.com;"></AzureSqlDatabase>

<AzureSqlDatabase Name="ExampleTargetLS" ConnectionString="data source=example.com;"></AzureSqlDatabase>

</LinkedServices>

</DataFactory>

</DataFactories>

</Biml>

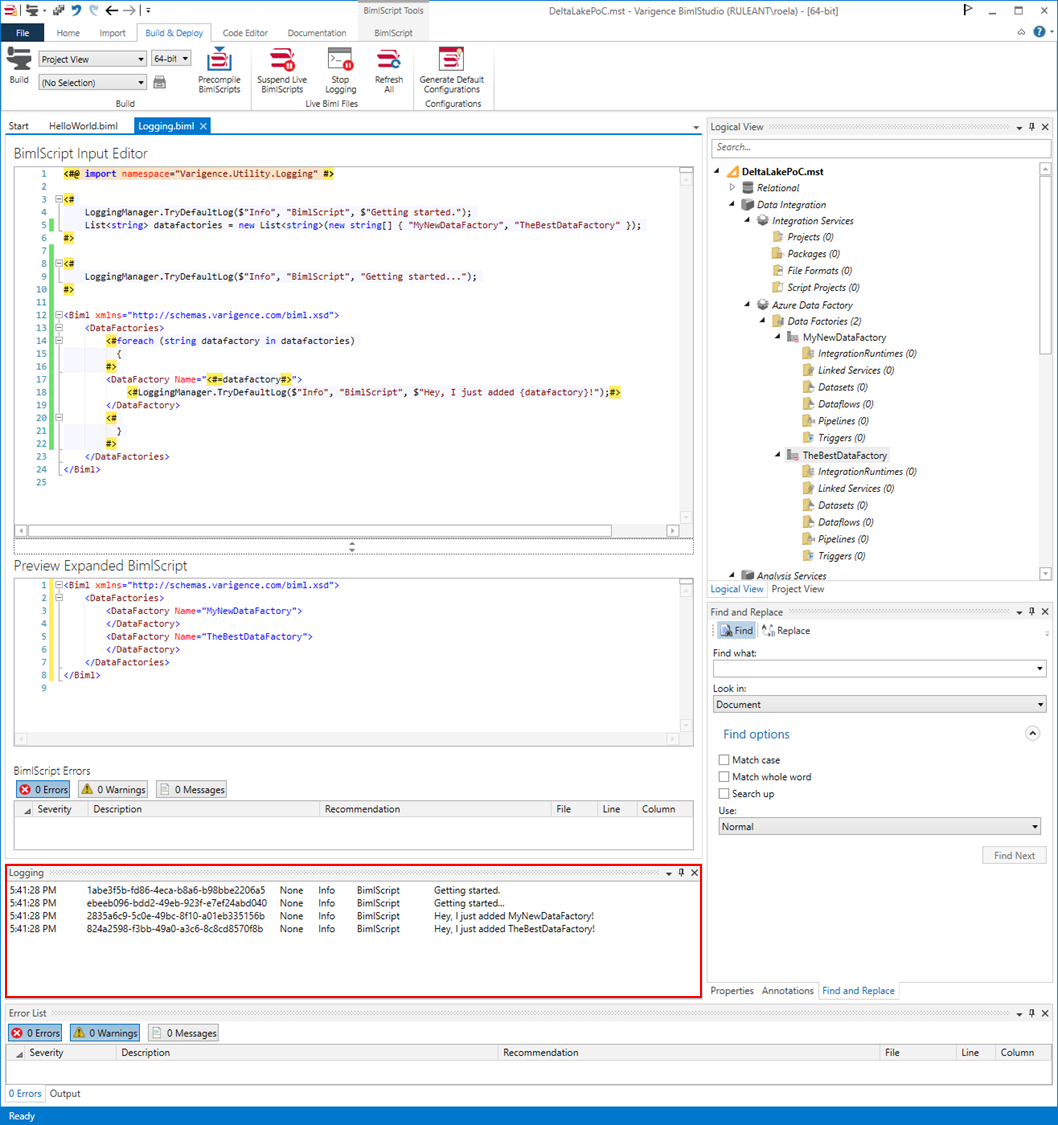

Using built-in logging for Biml

When working with Biml in any situation, be it using BimlExpress, BimlStudio or BimlFlex, it can be helpful to peek into what is happening in the background.

Some of you would have developed your own metadata framework that provides information to generate Biml, created a solution in BimlStudio or added extensions for BimlFlex. In all cases, it is possible to add logging to your solution and this is already natively available in BimlScript.

There is no need to create your own logging framework if the Biml logging already meets your needs, and it covers most common scenarios. The standard logging can write to a text buffer, an object such as a list, a file or it can trigger an event.

Working with logging is covered in detail in The Biml Book, and it is worth having a look especially if you are using BimlExpress.

But it is also possible to add logging to BimlStudio right away with just a few lines of code, with the immediate benefit that the log messages appear in the logging pane.

Make sure you enable the logging function in BimlStudio for this. This can be found in the ‘Build & Deploy’ menu.

To get started, add the Varigence Utility Logging reference to you script, and give the below code a try. This simple example creates a List of string values that are meant to be used to generate DataFactory Biml.

For each iteration, a log is written to the logging pane.

<#@ import namespace="Varigence.Utility.Logging" #>

<#

LoggingManager.TryDefaultLog($"Info", "BimlScript", $"Getting started.");

List<string> datafactories = new List<string>(new string[] { "MyNewDataFactory", "TheBestDataFactory" });

LoggingManager.TryDefaultLog($"Info", "BimlScript", "Getting started...");

#>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<DataFactories>

<#

foreach (string datafactory in datafactories)

{

#>

<DataFactory Name="<#=datafactory#>">

<#LoggingManager.TryDefaultLog($"Info", "BimlScript", $"Hey, I just added {datafactory}!");#>

</DataFactory>

<#

}

#>

</DataFactories>

</Biml>

The result looks like the screenshot below. Happy logging!

Dev Diary - Using Mapping Data Flow Parameters to dynamically use BimlFlex metadata

When using Mapping Data Flows for Azure Data Factory with inline datasets, the schema can be evaluated at runtime. This also paves the way to use of one of the key features this approach enables, which is support for schema drift.

Schema drift allows to evaluate or infer changes made to the schema (data structure) that is read from or written to. Without going into too much detail on this for now, the key message is that the schema is not required to be known in advance and can even change over time.

This needs to be considered for the new Data Flow Mapping patterns for BimlFlex. The Data Flow Mappings may read from multiple sources of different technology and write to an equal variety of targets (sinks), and some have stronger support for defining the schema than others.

Moreover, various components that may be used in the Data Flow Mapping require a known or defined column to function. This is to say that a column needs to be available to use in many transformations; in many cases this is mandatory.

The approach we are pioneering with BimlFlex is to make sure the generated patterns / Data Flow Mappings are made sufficiently dynamic by design by using Data Flow Mapping Parameters, so that we don't need to strictly define the schema upfront. The BimlFlex generated output for Data Flow Mappings intends to strike a balance between creating fully dynamic patterns that receive their metadata from BimlFlex, and allowing injecting custom logic using Extension Points.

Using Data Flow Mapping Parameters goes a long way in making this possible.

Using parameters, various essential columns can be predefined both in terms of name as well as value. This information can be used in the Data Flow Mapping using the dynamic content features provided.

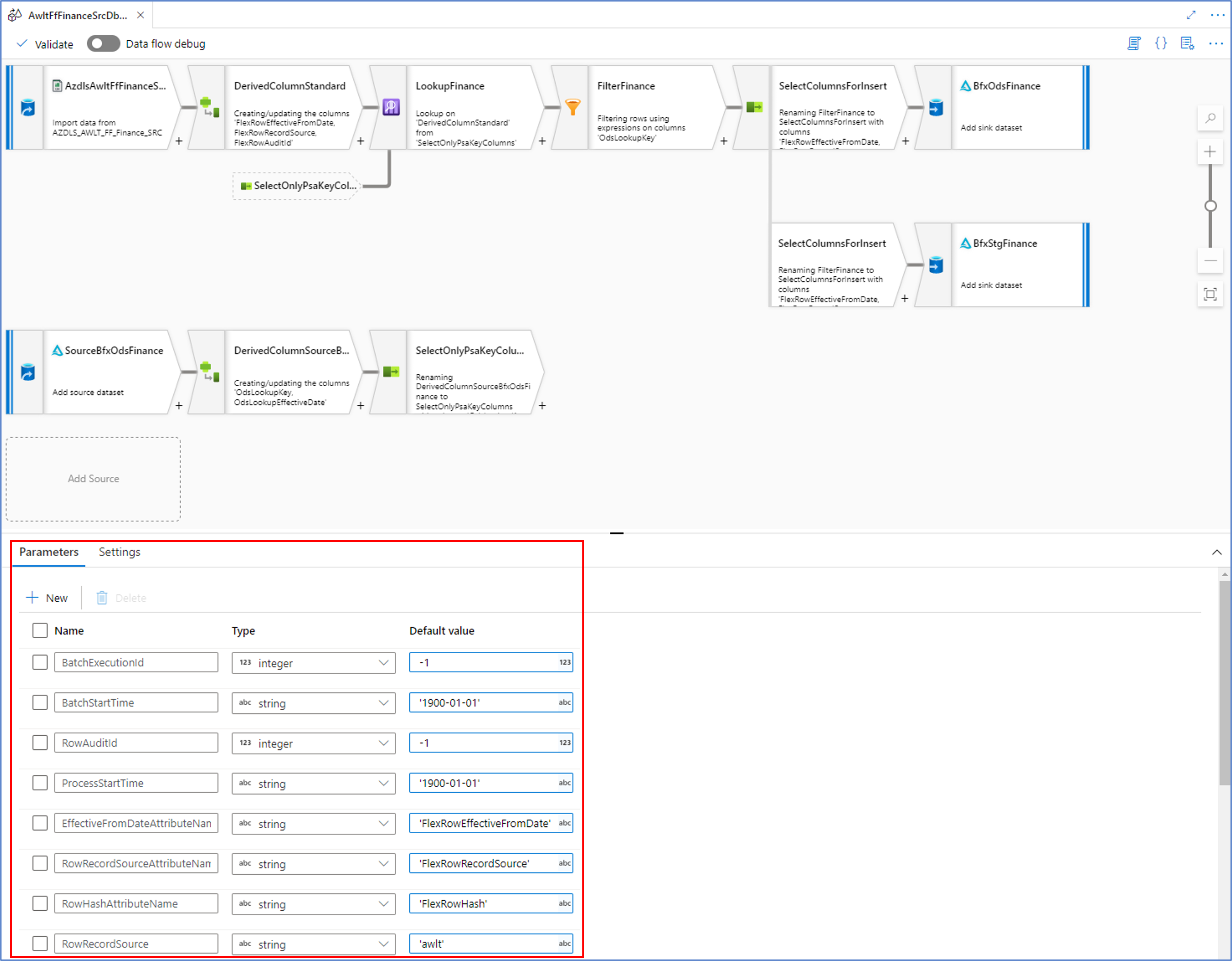

Consider the following screenshot of one of the generated output that loads new data form file into a Delta Lake staging area:

In our WIP approach, BimlFlex generates the essential metadata as parameters which are visible by clicking anywhere on the Data Flow Mapping canvas outside of the visible components.

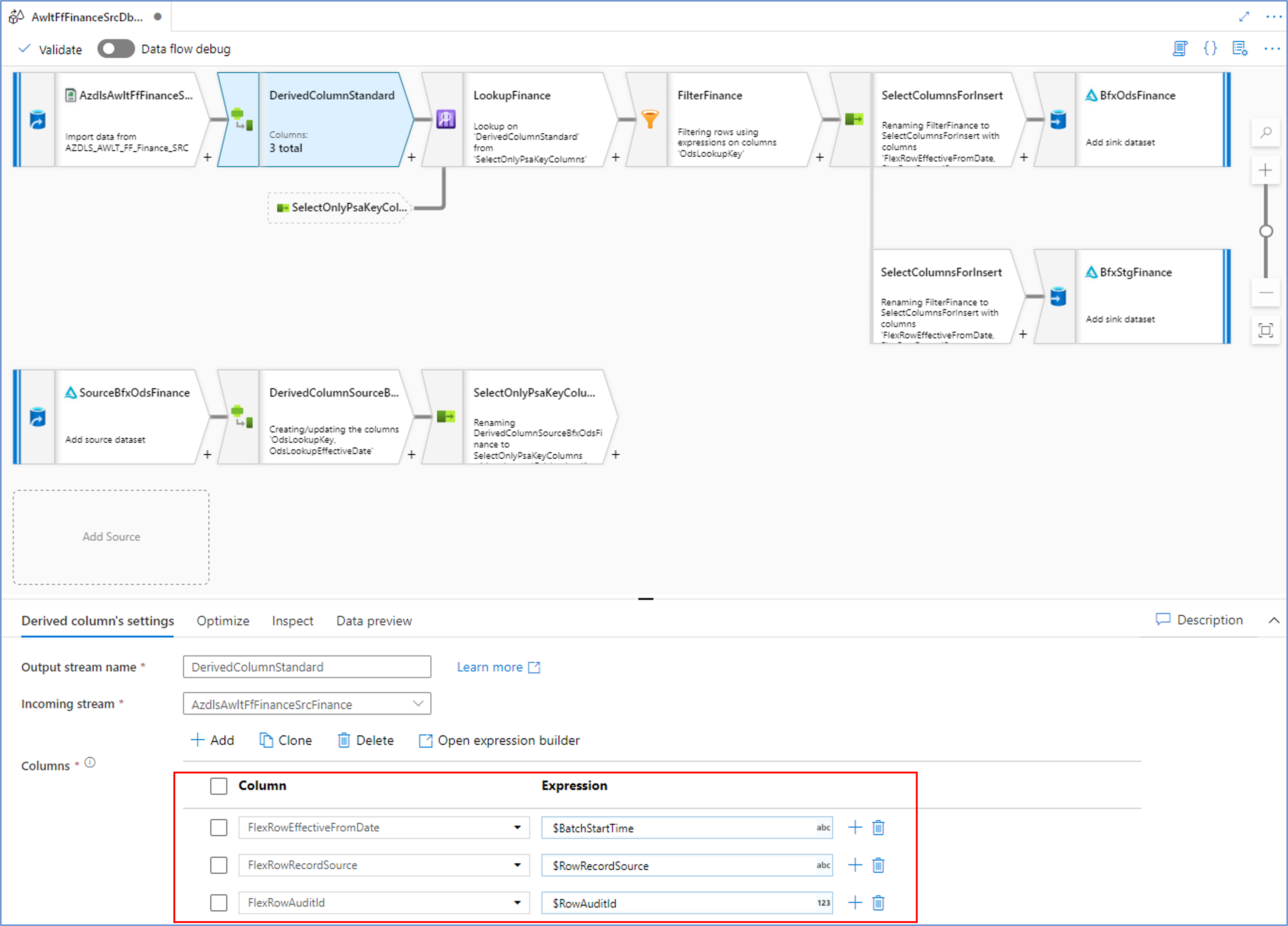

As an example, the ‘RowRecordSource’ parameter contains the name of the Record Source value from the BimlFlex metadata. This can be used directly in transformation such as in the Derived Column transformation below:

This means that we can use the names of the necessary columns as placeholders in subsequent transformations and their value will be evaluated at runtime. This can also be used to dynamically set column names, if required.

In the next posts, we’ll look at the Biml syntax behind this approach for the various transformations / components used.

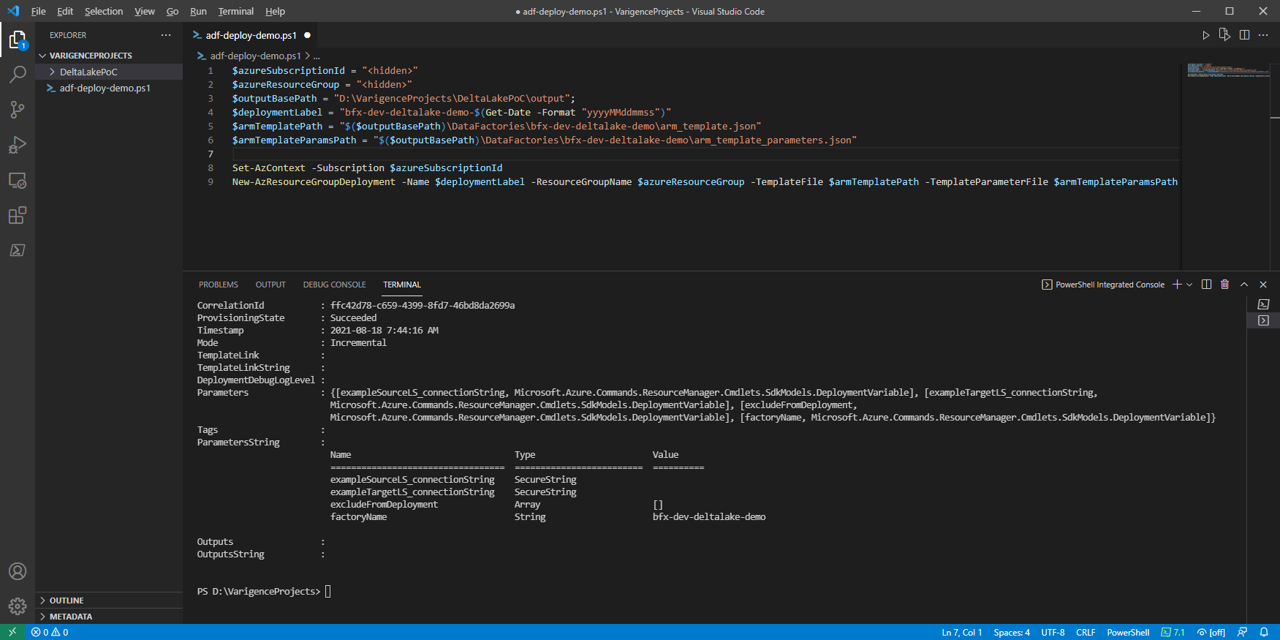

Dev Diary - Deploying Biml-Generated ADF Data Flow Mappings

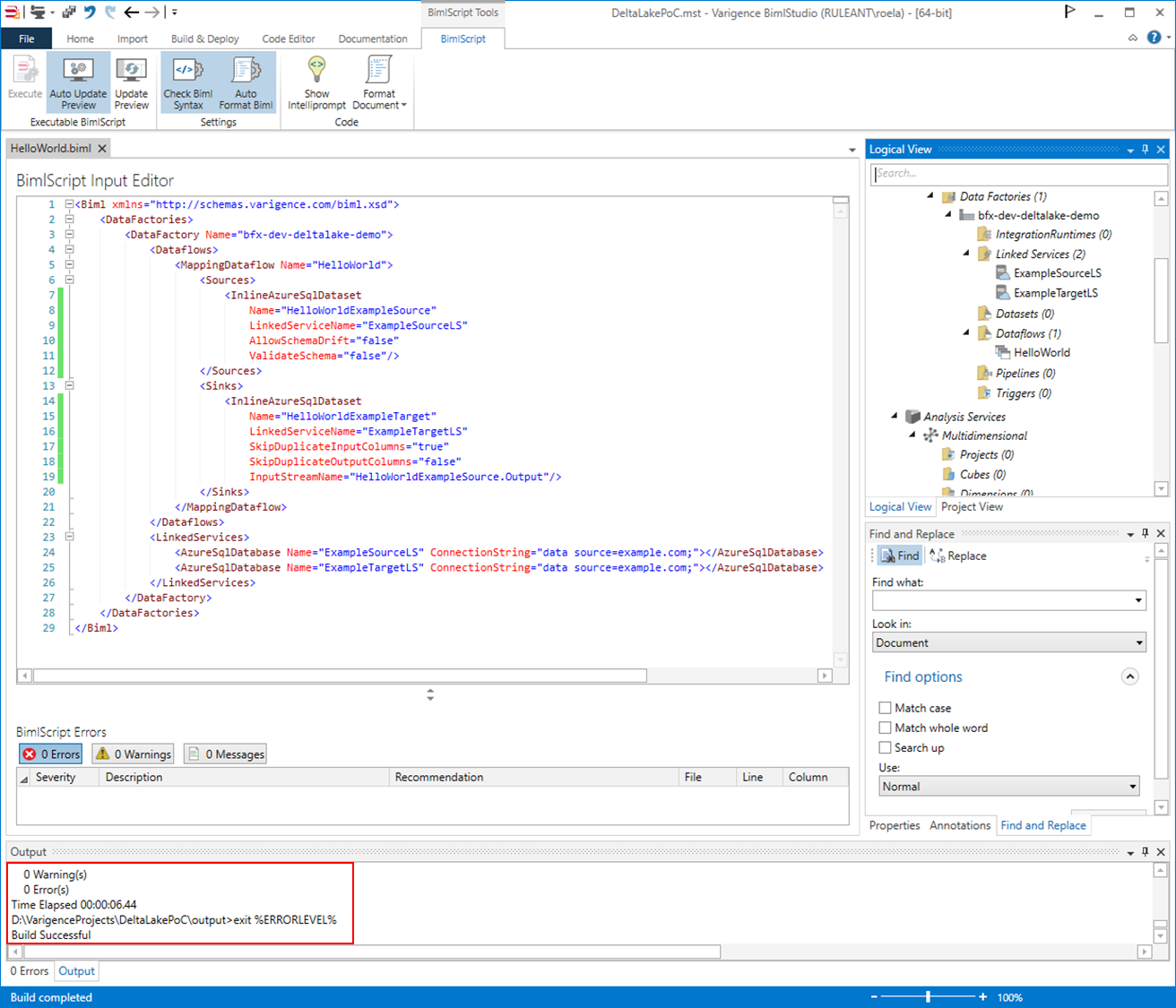

In the previous post on defining Data Flow Mappings for Azure Data Factory using Biml, we looked at a simple Biml script to define a Data Flow Mapping.

You may have noticed that the solution was 'built' in BimlStudio, at the bottom of the screenshot.

The build process has successfully created the necessary Azure Data Factory artefacts to create the intended Data Flow Mapping on Azure. BimlStudio has placed these in the designated output directory.

Biml and BimlStudio have the capability to generate various artefacts including Data Definition Language (DDL) files but it is the Azure Resource Manager (ARM) templates that we are interested in for now.

BimlStudio creates an arm_template.json file and a corresponding arm_template_parameters.json file that contain everything needed to deploy the solution to Azure. For large solutions, the files will be split into multiple smaller related files to ensure deployments without errors that may happen due to technical (size) limitations. This is done automatically as part of the build process.

Deployment of the ARM templates can be done manually via the Azure portal, but a convenient Powershell script can also be created using the New-AzResourceGroupDeployment cmdlet.

As an example, the following structure can be used using this approach:

$azureSubscriptionId = "<subscription id>" $azureResourceGroup = "<resource group id>" $outputBasePath = "D:\VarigenceProjects\DeltaLakePoC\output"; $deploymentLabel = "bfx-dev-deltalake-demo-$(Get-Date -Format "yyyyMMddmmss")" $armTemplatePath = "$($outputBasePath)\DataFactories\bfx-dev-deltalake-demo\arm_template.json" $armTemplateParamsPath = "$($outputBasePath)\DataFactories\bfx-dev-deltalake-demo\arm_template_parameters.json" Set-AzContext -Subscription $azureSubscriptionId New-AzResourceGroupDeployment -Name $deploymentLabel -ResourceGroupName $azureResourceGroup -TemplateFile $armTemplatePath -TemplateParameterFile $armTemplateParamsPath

Scripts such as these are automatically created using BimlFlex-integrated solutions, because the necessary information is already available here. In this example, the script has been updated manually.

When updated, the script can be run to deploy the solution to Azure. For example using Visual Studio Code:

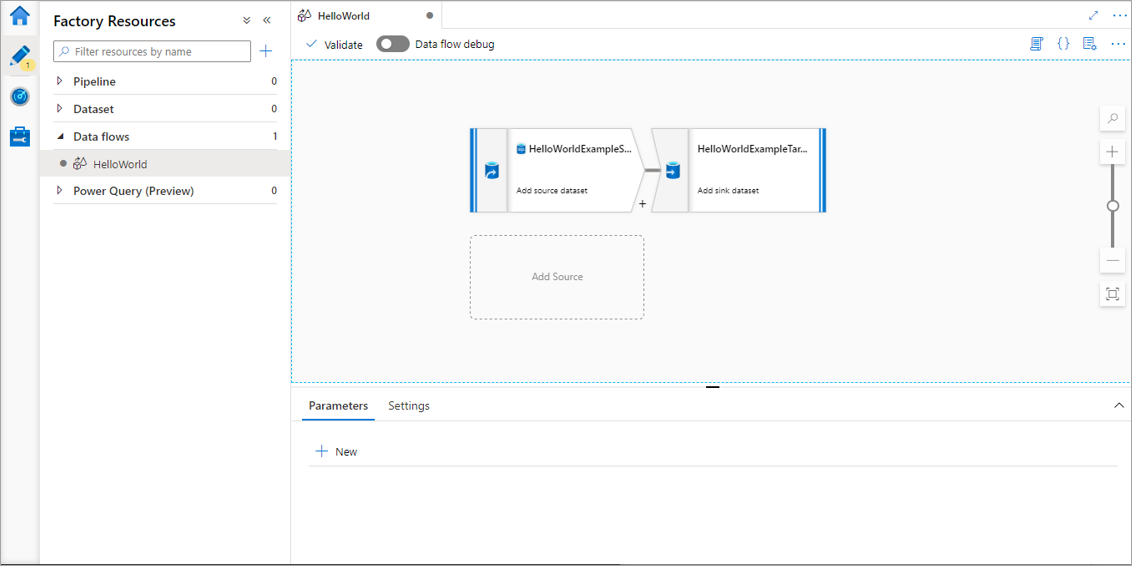

With the expected result in the target Data Factory:

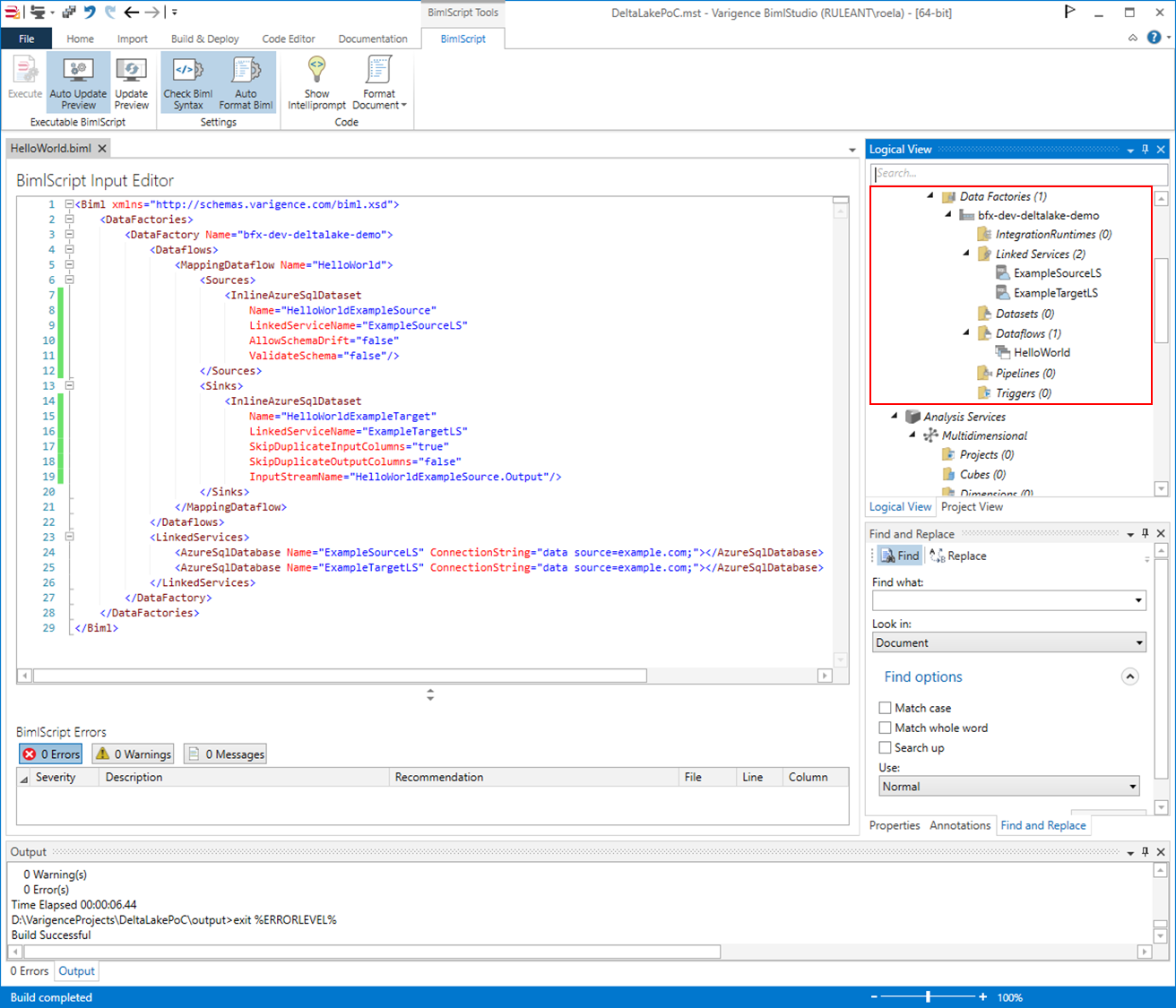

Dev Diary - Generating ADF Data Flow Mapping using Biml

The work to generate Data Flow Mappings in Azure Data Factory using the BimlFlex automation platform is nearing completion. While there is still more to do, there are also a lot of topics that are worth sharing ahead of this release.

The BimlFlex solution, as a collection of designs, settings, configurations and customizations is provided as Biml patterns that can be accessed from BimlStudio. This means that a dynamic preview of the expected (generated) output is visualized in BimlStudio, along with the supporting Biml code.

At this stage, and if required, BimlStudio allows for further customizations using Extension Points, which support a combination of Biml script, SQL or .Net code. These will become part of the data logistics solution that will be deployed.

The build process in BimlStudio will ‘compile’ the Biml code into native artefacts for the target platform and approach. In the case of this development diary, this will be as Data Flow Mappings for Azure Data Factory.

Using Data Flow Mappings has certain advantages (and disadvantages) compared to moving and transforming data using other Azure Data Factory components such as Copy Activities. Much of the remaining work to complete this feature is about finding the best mix between the available techniques, so that these can be generated from the design metadata.

One of the advantages of using Data Flow Mappings, aside from the visual representation of the data logistics, is the ability to use inline Sources and Sinks (targets). Inline datasets allow direct access to many types of data sources without a dedicated connector object (dataset). They are especially useful when the underlying structure may evolve. Also, and especially in data lake scenarios, they offer a way to manage where the compute takes place without requiring additional compute clusters.

It is an easy and fast way to use a variety of technologies.

In this post, and the subsequent posts as well, we will use this approach as a way of explaining working with Data Flow Mappings in BimlFlex.

Biml Data Flow Mapping syntax

Because the design metadata is provided as Biml script, it makes sense to start explaining the BimlFlex Data Flow Mapping support in BimlStudio because this is the best way to work with the Biml language.

Defining a Data Flow Mapping using Biml is easy. The various features, components and properties available in Data Flow Mappings are supported as Biml XML tags.

A Data Flow Mapping is referred to by using the MappingDataFlow segment. It is part of the Dataflows, which is in turn part of a DataFactory.

Consider the example below:

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<DataFactories>

<DataFactory Name="bfx-dev-deltalake-demo">

<Dataflows>

<MappingDataflow Name="HelloWorld">

<Sources>

<InlineAzureSqlDataset

Name="HelloWorldExampleSource"

LinkedServiceName="ExampleSourceLS"

AllowSchemaDrift="false"

ValidateSchema="false"/>

</Sources>

<Sinks>

<InlineAzureSqlDataset

Name="HelloWorldExampleTarget"

LinkedServiceName="ExampleTargetLS"

SkipDuplicateInputColumns="true"

SkipDuplicateOutputColumns="false"

InputStreamName="HelloWorldExampleSource.Output"/>

</Sinks>

</MappingDataflow>

</Dataflows>

<LinkedServices>

<AzureSqlDatabase Name="ExampleSourceLS" ConnectionString="data source=example.com;"></AzureSqlDatabase>

<AzureSqlDatabase Name="ExampleTargetLS" ConnectionString="data source=example.com;"></AzureSqlDatabase>

</LinkedServices>

</DataFactory>

</DataFactories>

In this ‘Hello World’ example, a single Data Flow Mapping is created with a single Source and a single Sink. Both the Source and the Sink are defined as inline datasets, which are specific options in Biml to distinguish them from regular datasets.

Inline datasets require a connection (Linked Service) to be assigned, and for a valid result this Linked Service must also be available.

When using BimlStudio to interpret this snippet, these components will be visible in the Logical View:

This skeleton code shows how Biml supports Data Flow Mappings, and inline data sources in particular. In the next post, we will expand this into a larger pattern and then look into how BimlFlex configurations influence this output.