Varigence Blog

The Top Five Challenges of Data Lakes and how to overcome them with a Data Lakehouse

Overview

BimlFlex's next version will support Databricks automation, allowing users to integrate Databricks seamlessly into their data pipelines across all layers, from Staging to Data Mart. The preview has already been well received by some of our customers. In addition, our upcoming blog post will provide details on how to configure and execute a fully automated Databricks solution.

This Databricks automation support is a significant development for businesses seeking to integrate Databricks into their existing data pipelines. By streamlining the integration process, users can save time and reduce costs. The resulting benefits of increased efficiency and improved data quality can help businesses remain competitive in a constantly evolving marketplace. In addition, BimlFlex with Databricks automation support ensures consistent and accurate data processing, ultimately leading to more robust data insights and decision-making for businesses of all sizes.

In our upcoming blog post, we'll dive into how to set up a fully automated Databricks solution. But first, let's discuss why this is significant.

Data Quality

Data lakes are a valuable source of data. However, their immense size and varied quality can be daunting. Accuracy is paramount when using this resource for analytics; incomplete or inconsistent sources that contain errors lead to unreliable results and poor decisions. Quality control and proper management must happen early if data lakes are to reach their potential.

Organizations can address these challenges by deploying robust validation and cleansing processes that keep their data accurate and consistent, which builds confidence in decision-making. First, use Databricks or Azure Synapse Analytics to implement a Lakehouse. Next, transform your data into a uniform format and validate all values against predefined rules. Finally, implement BimlFlex automation to speed up your analysis process and avoid the risk of formatting discrepancies.

Data Governance

Another challenge with data lakes is ensuring proper data governance. This includes controlling who has access to the data, enforcing data access policies, and tracking how the data is used. Without appropriate governance measures in place, a breach of security could put crucial information and your business objectives at risk.

BimlFlex automation for Databricks and Azure Synapse has advanced data governance features, so organizations can take proactive measures to secure their data and maintain high quality standards. Implementing comprehensive tools such as access controls, lineage tracking, and discovery helps companies meet security requirements and stay compliant with government regulations, while producing accurate results backed by reliable information.

Data Integration

Combining data from various sources and in different formats can be arduous. However, with the right preparation strategy, making sense of what would otherwise seem like chaos is possible. Cleaning and preparing the data for analysis can require significant time and effort.

Organizations can use Azure Data Factory and Databricks for cleansing and transformation to ensure the collected data is consistent, ready for analysis, and put to good use. In addition, Databricks and Azure Synapse solutions offer data catalogue features that let organizations search their in-house data quickly and understand its content and structure.

Data Silos

Data lakes are a great source of organizational data, but they also present the risk of creating isolated repositories that inhibit collaboration and waste resources. Without visibility across departments, effort is duplicated while crucial information remains in silos, which ultimately diminishes the potential of all corporate data.

Organizations can overcome data silos by using BimlFlex to automate Databricks and Azure Synapse solutions, creating a centralized data lakehouse. This single source gives an integrated and unified view of their data, with easy access across different sources and departments. It also enables organizations to combine disparate datasets into one cohesive unit, allowing for more informed decisions based on accurate information. By implementing a data automation solution with BimlFlex, they can use the intelligence within their systems and operate more efficiently through improved collaboration among stakeholders in business-critical activities.

Complexity

Data lake implementation can be a formidable undertaking for organizations with limited personnel and budget, because it requires specialized skills and resources. Employing automation with BimlFlex can cut costs considerably. Managed services like Databricks and Azure Synapse provide a Lakehouse solution that simplifies implementation and minimizes overhead without compromising performance.

Conclusion

A Data Lakehouse is a powerful data management platform that can help organizations store, process, and analyze large amounts of data in a scalable, secure, and efficient manner. Combining this with BimlFlex data solution automation, a Lakehouse for processing using Databricks or Azure Synapse can improve efficiency and scalability. If you are interested in automating your Lakehouse solution, we can help. Our expert team has extensive experience with the platform and can offer guidance on how to automate your data management processes best. Contact us today to learn more.

Contact us to take the next step. Request Demo or simply email sales@varigence.com

Automating Change Data Capture on Azure Data Factory: Streamline Your Data Integration with BimlFlex

Overview

BimlFlex is a metadata-driven framework that enables customers to automate data integration solutions. One of the key areas where it can provide significant value is automating Microsoft SQL Server Change Data Capture (CDC) on Azure Data Factory (ADF). By using BimlFlex, customers can create and deploy efficient, scalable, and reusable CDC solutions in ADF with little to no manual effort, reducing errors and improving the overall efficiency of the process. In this blog post, we will discuss how BimlFlex can be used to automate CDC on ADF, the benefits of doing so, and provide a step-by-step guide for implementing CDC on ADF using BimlFlex.

Supported Target Platforms

BimlFlex currently supports a range of target platforms that enable customers to automate data integration solutions, including Azure Synapse Analytics, Snowflake, Databricks, Azure SQL Database, Azure SQL Managed Instance, and Microsoft SQL Server. BimlFlex generates all the artifacts required for CDC data integration, including data pipelines, data transformations, stored procedures, and notebooks.

In addition to providing reliable and efficient CDC automation, BimlFlex integrates seamlessly with popular source control solutions like GitHub and Azure DevOps. This integration makes it easy for customers to manage and track changes to their artifacts, ensuring smooth collaboration and minimizing errors.

By providing support for multiple target platforms, BimlFlex offers customers the flexibility to choose the platform that best fits their needs, and to integrate with other services, like ADF, to create more comprehensive and efficient solutions. Whether you are using Azure Synapse Analytics for large-scale data warehousing or Microsoft SQL Server for on-premises applications, BimlFlex provides a powerful and versatile framework that streamlines CDC automation, making data integration more efficient and reliable.

Requirements to Automate CDC on ADF

When implementing automation with BimlFlex for CDC on ADF, there are several things to consider to ensure the success of your data integration solution.

Full Initial Load

When implementing Change Data Capture (CDC) on Azure Data Factory (ADF), it's important to consider the initial load of data from non-CDC tables. This involves capturing the Log Sequence Number (LSN) as a high watermark for the following delta or change data capture loads. The LSN is a unique identifier that SQL Server uses to track changes made to the database, making it crucial to capture accurately. By ensuring a successful initial load and accurate capture of LSN, you can improve the effectiveness and reliability of your CDC process.

Overcoming Challenges with In-built CDC Functions

One of the most common issues with built-in CDC functions is the potential failure of the cdc.fn_cdc_get_all_changes_<capture_instance> function when it returns no rows within the given from_lsn and to_lsn range. Instead of simply producing no rows, this can cause the entire pipeline to fail. To avoid this, it's important to check for any changes in the process before proceeding. If there are no changes, the pipeline can be exited, and if there are changes, the main activity can be called to ingest and process the delta. Once this is done, the last LSN should be captured and saved as the high watermark for the next load, ensuring a successful and reliable CDC process.
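The control flow described above can be sketched as follows. This is a minimal Python illustration, not BimlFlex or ADF output: `plan_cdc_run`, `has_changes`, and the action names are hypothetical, and keeping the existing watermark on an early exit is an assumption.

```python
from typing import Callable, NamedTuple


class CdcRunPlan(NamedTuple):
    action: str          # "exit" or "ingest"
    next_watermark: str  # LSN to store as the high watermark for the next load


def plan_cdc_run(
    from_lsn: str,
    to_lsn: str,
    has_changes: Callable[[str, str], bool],
) -> CdcRunPlan:
    """Decide whether the main activity should run for this LSN range.

    `has_changes` stands in for a cheap check against the source
    (hypothetical). It runs first, so an empty range never reaches
    cdc.fn_cdc_get_all_changes_<capture_instance>.
    """
    if not has_changes(from_lsn, to_lsn):
        # Nothing to ingest: exit early and keep the existing watermark
        # (assumption; the post does not say what is stored in this case).
        return CdcRunPlan("exit", from_lsn)
    # Changes exist: ingest the delta, then save to_lsn as the new watermark.
    return CdcRunPlan("ingest", to_lsn)
```

Separating the decision from the ingestion keeps the CDC function call out of the path that would otherwise fail on an empty range.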

Restarting CDC

In certain cases, you may need to restart CDC, such as when you need to compare and reload all data against your current dataset, which may occur when restoring your database or reinitializing change data capture on the source. To facilitate this process, BimlFlex offers the PsaDeltaUseHashDiff setting, which adds a RowHashDiff column that enables a full comparison when reinitializing CDC from the source. This feature can greatly simplify the restart process and ensure the accuracy and completeness of your data integration solutions.

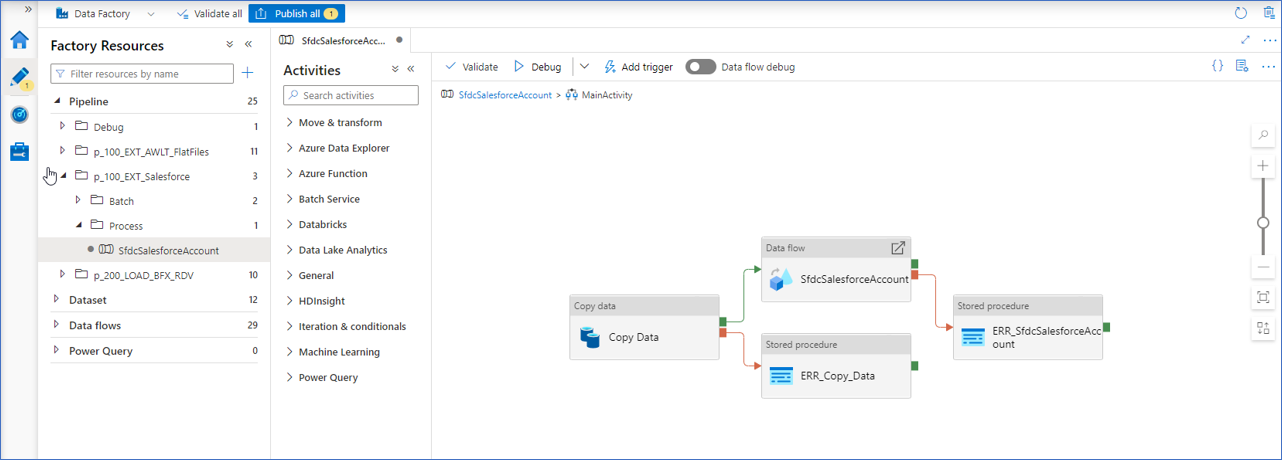

How BimlFlex Automates CDC for ADF

To handle the requirements of potential full reloads and the limitations of CDC functions in handling queries for no data returned, we separated the parameters (GetParameter) from the main activity (Main Activity). This separation ensures that the correct process - Initial Load, Delta Load or Full Reload - is determined based on the captured parameters, streamlining the process and avoiding potential errors. By doing so, the CDC automation process using BimlFlex becomes more efficient and reliable, resulting in greater productivity and accuracy.

The following graphic illustrates the implementation of the GetParameter activity that handles all the logic to set the __from_lsn, __to_lsn and IsFullLoad variables.

Lookup (Lkp__from_lsn)

This activity retrieves the previous load's high watermark value (__from_lsn) from the BimlCatalog or sets a default value '0x00000000000000000001' on IsInitialLoad.

SetVariable (Set__from_lsn)

This activity sets the value of the __from_lsn variable to the value retrieved by the Lkp__from_lsn activity.

@activity('Lkp__from_lsn').output.firstRow.VariableValue

SetVariable (SetIsFullLoad)

This activity sets the value of the IsFullLoad variable. The expression evaluates to true if the IsInitialLoad parameter is set to true, or if the high watermark variable (__from_lsn) is equal to the default value '0x00000000000000000001'.

@if(or(pipeline().parameters.IsInitialLoad, equals(variables('__from_lsn'), '0x00000000000000000001')), true, false)

Script (Lkp__min_lsn)

This activity retrieves the minimum LSN value of <capture_instance> by running the sys.fn_cdc_get_min_lsn function. The default value of __min_lsn is 0x00000000000000000001.

SELECT ISNULL(CONVERT(NVARCHAR(256), sys.fn_cdc_get_min_lsn ('<capture_instance>'), 1), '0x00000000000000000001') AS [__min_lsn]

SetVariable (Set__min_lsn)

This activity sets the value of the __min_lsn variable to the value retrieved in the Lkp__min_lsn Script activity.

@activity('Lkp__min_lsn').output.resultSets[0].rows[0].__min_lsn

Script (Lkp__max_lsn)

This activity retrieves the maximum LSN value by running the sys.fn_cdc_get_max_lsn function. The default value of __max_lsn is '0x00000000000000000001'.

SELECT ISNULL(CONVERT(NVARCHAR(256), sys.fn_cdc_get_max_lsn(), 1), '0x00000000000000000001') AS [__max_lsn]

SetVariable (Set__max_lsn)

This activity sets the value of the __max_lsn variable to the value retrieved in the Lkp__max_lsn Script activity.

@activity('Lkp__max_lsn').output.resultSets[0].rows[0].__max_lsn

Script (Lkp__to_lsn)

This activity retrieves the value of __to_lsn by running the sys.fn_cdc_map_time_to_lsn function. The value of __to_lsn is determined by the pipeline parameter BatchStartTime and a default value of '0x00000000000000000001'.

@concat('SELECT ISNULL(CONVERT(NVARCHAR(256), sys.fn_cdc_map_time_to_lsn(''largest less than or equal'', ''', formatDateTime(pipeline().parameters.BatchStartTime, 'yyyy-MM-dd HH:mm:ss.fff'), '''), 1), ''0x00000000000000000001'') AS [__to_lsn]')
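Because the doubled single quotes make the expression hard to read, it can help to see the SQL text it evaluates to. The sketch below rebuilds the same string in Python for illustration only; `build_to_lsn_query` is a hypothetical helper and is not part of BimlFlex or ADF.

```python
from datetime import datetime

DEFAULT_LSN = "0x00000000000000000001"


def build_to_lsn_query(batch_start_time: datetime) -> str:
    """Build the SQL text that the ADF concat() expression produces."""
    # The ADF format 'yyyy-MM-dd HH:mm:ss.fff' keeps milliseconds.
    # strftime's %f yields microseconds, so the last three digits are trimmed.
    stamp = batch_start_time.strftime("%Y-%m-%d %H:%M:%S.%f")[:-3]
    return (
        "SELECT ISNULL(CONVERT(NVARCHAR(256), "
        "sys.fn_cdc_map_time_to_lsn('largest less than or equal', "
        f"'{stamp}'), 1), '{DEFAULT_LSN}') AS [__to_lsn]"
    )


if __name__ == "__main__":
    print(build_to_lsn_query(datetime(2023, 3, 1, 12, 30, 45, 123000)))
```

Each pair of single quotes inside the ADF string literal becomes one quote in the generated SQL, which is why the timestamp and the default LSN end up as ordinary SQL string literals.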

SetVariable (Set__to_lsn)

This activity sets the value of __to_lsn, the high watermark for the next load. It depends on the SetIsFullLoad, Set__min_lsn, Set__max_lsn and Lkp__to_lsn activities. The expression first checks whether the IsFullLoad variable is true and, if so, returns the value of the __max_lsn variable. If IsFullLoad is false, the expression checks whether the __to_lsn value is the default '0x00000000000000000001'; if it is, the expression returns the value of the __min_lsn variable. Otherwise, it returns the __to_lsn value.

@if(variables('IsFullLoad'), variables('__max_lsn'), if(equals(activity('Lkp__to_lsn').output.resultSets[0].rows[0].__to_lsn, '0x00000000000000000001'), variables('__min_lsn'), activity('Lkp__to_lsn').output.resultSets[0].rows[0].__to_lsn))


SetVariable (Reset__from_lsn)

This activity resets the value of the __from_lsn variable to __min_lsn if the current high watermark value (__from_lsn) is smaller than __min_lsn, the low endpoint of the capture instance.

@if(less(activity('Lkp__from_lsn').output.firstRow.VariableValue, variables('__min_lsn')), variables('__min_lsn'), activity('Lkp__from_lsn').output.firstRow.VariableValue)
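Taken together, the SetIsFullLoad, Set__to_lsn and Reset__from_lsn expressions can be read as one small decision function. The Python sketch below mirrors that logic for illustration; `get_parameters` and `LoadParameters` are hypothetical names, and comparing LSNs numerically is an assumption standing in for the ADF `less()` comparison on the LSN strings.

```python
from dataclasses import dataclass

DEFAULT_LSN = "0x00000000000000000001"


@dataclass(frozen=True)
class LoadParameters:
    from_lsn: str
    to_lsn: str
    is_full_load: bool


def _lsn(value: str) -> int:
    """Parse a '0x...' LSN string so values can be compared numerically."""
    return int(value, 16)


def get_parameters(
    is_initial_load: bool,
    stored_from_lsn: str,  # result of Lkp__from_lsn
    min_lsn: str,          # result of Lkp__min_lsn
    max_lsn: str,          # result of Lkp__max_lsn
    mapped_to_lsn: str,    # result of Lkp__to_lsn
) -> LoadParameters:
    # SetIsFullLoad: initial load requested, or no watermark stored yet.
    is_full_load = is_initial_load or stored_from_lsn == DEFAULT_LSN

    # Set__to_lsn: full loads run up to the max LSN; otherwise fall back to
    # the min LSN when the time-to-LSN mapping returned the default value.
    if is_full_load:
        to_lsn = max_lsn
    elif mapped_to_lsn == DEFAULT_LSN:
        to_lsn = min_lsn
    else:
        to_lsn = mapped_to_lsn

    # Reset__from_lsn: never start below the capture instance's low endpoint.
    if _lsn(stored_from_lsn) < _lsn(min_lsn):
        from_lsn = min_lsn
    else:
        from_lsn = stored_from_lsn

    return LoadParameters(from_lsn, to_lsn, is_full_load)
```

For example, a stored watermark equal to the default value yields a full load that runs from the capture instance's minimum LSN to the current maximum LSN.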

Conclusion

This blog post discusses the benefits of using BimlFlex for automating CDC on ADF, which can save time, reduce errors and improve the overall efficiency of the process. It also outlines key considerations for implementing automation with BimlFlex for CDC on ADF, including the full initial load, overcoming challenges with built-in CDC functions, and restarting CDC. The post also gives a behind-the-scenes look at the generated output and explains how the GetParameter and Main Activity are separated to ensure the correct process is executed.

BimlFlex provides a powerful metadata-driven framework that simplifies the implementation of CDC on ADF, enabling customers to create scalable and reusable solutions. This post also walks through the steps for implementing CDC on ADF using BimlFlex, ensuring a seamless and reliable process.

We encourage customers to try BimlFlex for themselves and take advantage of the benefits it provides. Additional resources are available for further learning, including the BimlFlex documentation, webinars, and tutorials.


Combining DBT with BimlFlex

During our travels, we are regularly asked how BimlFlex compares against DBT. Customers naturally compare our BimlFlex data solution automation framework against all our competitors, but DBT is the odd one out, so it is worth covering in detail.

This is because BimlFlex and DBT are not really competing. In fact, they can complement each other.

DBT stands for ‘Data Build Tool’ and is an effective approach for organizing data pipelines using simple configuration files and a Command Line Interface.

At a high level, DBT works by defining a project that contains ‘models’. Models contain SQL files that are saved to a designated directory where DBT can find them. A project itself references a ‘profile’ which contains connectivity information, and DBT will look after deployment. Models can reference each other to construct a data logistics pipeline with separate steps, which can be visualized as a data logistics lineage graph.

The fact that everything (i.e. configuration files, model files) is stored in plain text (i.e. not as binaries) means that it’s a good match for a data solution automation framework, which BimlFlex provides.

As software for managing orchestration and deployment for data logistics processes, DBT does not provide a framework or patterns out of the box. It comes with templating engine support (Jinja) that allows users to define their own SQL templates, but users would have to provide the patterns themselves as well as the framework to design and manage the metadata that would power those templates.

In short, it does not provide a data solution automation framework, and this is where BimlFlex comes in.

BimlFlex contains production-ready templates for common architectures as well as a Graphical User Interface (GUI) to manage the models and metadata. BimlFlex compiles this design metadata into executable artifacts for various target platforms – including the Microsoft Database family, Synapse, Snowflake and Databricks. BimlFlex can natively generate fully-fledged Execute Pipelines and Mapping Data Flows, but it can also generate SQL Stored Procedures.

If BimlFlex is combined with DBT, data teams can leverage DBT’s strengths without having to invent or manage their own automation framework and metadata management solution.

With some configuration, BimlFlex can generate data logistics processes into a directory that is set up for DBT, so that orchestration can be managed there.

Options for using BimlFlex with DBT

Even though BimlFlex’s automation framework is a good fit for DBT, there are a few approaches you can take which may require some customization of the BimlFlex output.

Because DBT’s workflow uses Create Table As Select (CTAS) statements in queries or common table expressions (CTEs) to define tables, it does not directly support creating tables using Data Definition Language (DDL) statements. For the same reason, Stored Procedures are usually not part of the DBT workflow either. In DBT, this is managed through SQL SELECT statements.

A possible approach is to use DBT to execute the BimlFlex-generated Stored Procedures as Macros. This means that the procedure requires a wrapper such as in this example:

{% macro output_message(return_code) %}

<Stored Procedure>

call output_message();

{% endmacro %}

In BimlFlex, this can be done using the Extension Points for the Stored Procedures.

With more advanced use of Extension Points and BimlScript, it is also an option to convert the procedure into one or more SQL statements or common table expressions. This removes the procedure headers and uses C# or Visual Basic to spool the results into DBT model files.

Data sources can be emitted using the same techniques to represent the DBT source YAML files, for example:

sources:
  - name: AdventureWorksLT
    tables:
      - name: address
      - name: customer
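As a rough illustration of emitting such a file from metadata, the sketch below renders the same structure from a source name and a list of table names. It is written in Python purely for illustration (the post describes doing this with BimlScript and C# or Visual Basic), `render_dbt_sources` is a hypothetical helper, and a real project file may need further keys.

```python
from typing import Iterable


def render_dbt_sources(source_name: str, tables: Iterable[str]) -> str:
    """Render a minimal dbt sources block as YAML text."""
    lines = [
        "sources:",
        f"  - name: {source_name}",
        "    tables:",
    ]
    # One list entry per table, nested under the source's tables key.
    lines += [f"      - name: {table}" for table in tables]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dbt_sources("AdventureWorksLT", ["address", "customer"]), end="")
```

The indentation matters here: the table entries must sit under `tables`, which in turn belongs to the source entry.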

Of course, it is also possible to combine the strengths of BimlFlex and DBT in different ways. You can use the standard BimlFlex features to generate Execute Pipelines, SSIS Packages or Mapping Data Flows to connect to the data sources, and load these into a staging layer from where DBT can pick up the data logistics using BimlFlex-generated SQL statements.

If you want to know more, please reach out to the BimlFlex team to see how you can combine the power of BimlFlex’s code generation with DBT’s orchestration and lineage capabilities.

Dev Diary - Initial Extension Points added to Mapping Data Flows

Work on expanding our support for Mapping Data Flows is progressing, and the latest additions introduce the first Extension Points to our generated Mapping Data Flow output.

Extension Points allow BimlFlex users to 'inject' bespoke logic into the standard templates that are generated out of the box. Using Extension Points, it is possible to add, remove or modify the way the templates operate to cater for circumstances that are unique to specific projects.

In principle, the complete .NET Framework is available by using BimlScript, but in most cases it will be sufficient to add one or more transformations, or to direct data down a different path in the processing.

Extension Points are available for various templates and support different technologies, but they were not available for Mapping Data Flows - until now!

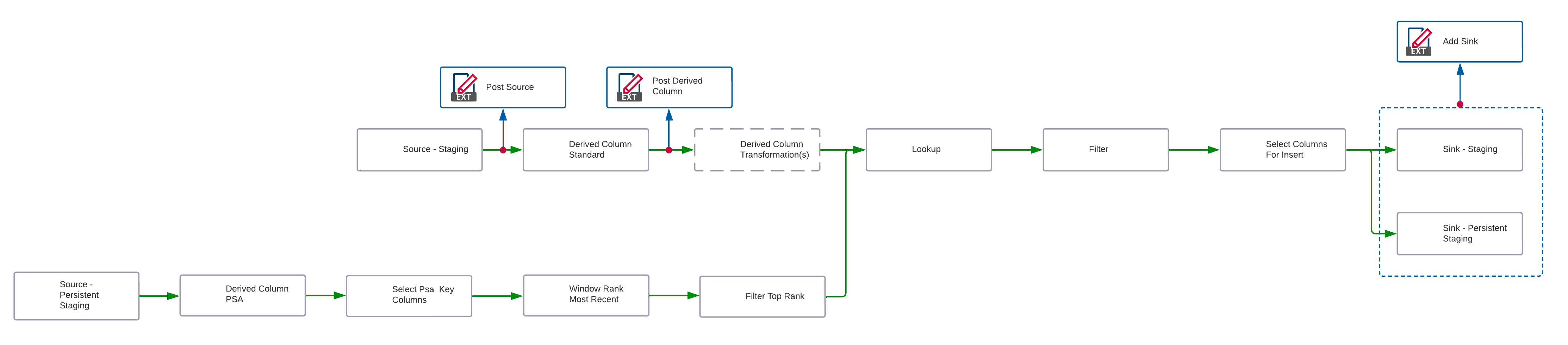

The first three Extension Points for Mapping Data Flows have now been added for our 'source-to-staging' templates:

- Post Copy

- Post Derived Column

- Add Sink

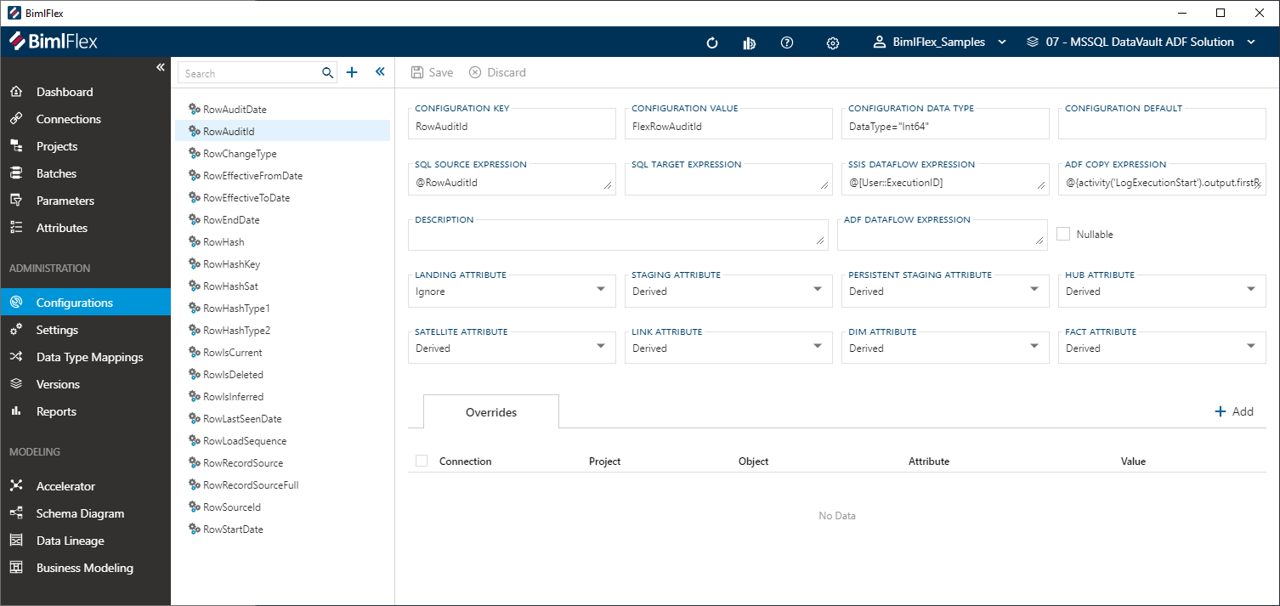

The following diagram shows where these tie into the regular template:

This feature allows for various new scenarios to be supported using Mapping Data Flows.

For example, you can add a Sink and connect it to any transformation in the data flow. Or, you can add custom columns to be added to the data flow at various spots in the process.

These new Extension Points can be added as always using BimlStudio. Because we mean to add many more, we have created a new Mapping Data Flows category for this:

More Extension Points will be added over time, but for now these extension points will be available as part of our BimlFlex 2022 R2 (May 2022) release.

Dev Diary - Embracing the new Azure Data Factory Script Activity

Earlier this month, the Azure Data Factory (ADF) team at Microsoft released a new way to execute SQL statements in a pipeline: the Script Activity.

The Script Activity allows you to execute common SQL statements to modify structures (DDL) and store, retrieve, delete or otherwise modify data (DML). For example, you can call Stored Procedures, run INSERT statements or perform lookups using queries and capture the result. It complements existing ADF functionality such as the Lookup and Stored Procedure pipeline activities.

In some cases though, the Lookup and Stored Procedure activities have limitations that the new Script Activity does not have. Most importantly, the Script Activity also supports Snowflake and Oracle.

This means that additional customizations to call Stored Procedures on Snowflake (and lookups, to an extent) are no longer necessary. You can simply use the Script Activity.

Varigence has been quick to adopt this new feature, which will be available in the upcoming 2022 R2 release (available early April). As part of 2022 R2, the Script Activity is supported in BimlScript, and the BimlFlex patterns have been updated to take advantage of this new ADF functionality.

A major benefit is that a single approach can be used for all lookup and procedure calls. And, specifically for Snowflake targets, it is no longer necessary to use the Azure Function Bridge that is provided by BimlFlex to run procedures and queries on Snowflake. Even though the Azure Function Bridge has been effective (and is still in use for certain features that ADF does not yet support directly), we prefer to use native ADF functionality whenever possible because this offers the most flexibility and ease of maintenance for our users.

Below is an example of the changes in the patterns that you can expect to see in the upcoming 2022 R2 release. In this example, the Azure Function call has been replaced by a direct script call to Snowflake.

Dev Diary - Pushing down data extraction logic to the operational system environment

In some scenarios, when a 'source' operational system shares the technical environment with the data solution, it can be efficient to make sure all processing occurs on this environment. This way, the data does not have to 'leave' the environment to be processed.

In most cases, operational systems are hosted on different environments than the one(s) that host the data solution, which means that data first needs to be brought into the data solution for further processing.

If, however, the environment of an operational system is the same as that of the data solution, this initial 'landing' or 'staging' step does not require the data to be processed via an external route. In other words, it becomes possible to 'push down' data staging logic to this shared environment. This way, the delta detection and the integration of the resulting data delta into the data solution both occur in the environment, and not by using separate data transformations in, for example, SQL Server Integration Services (SSIS) or Azure Data Factory (ADF).

Pushdown is sometimes referred to as 'ELT' (Extract, Load and then Transform), as opposed to the 'ETL' (Extract, Transform and then Load) paradigm. While these terms may not always be clear or helpful, generally speaking ELT / pushdown means that most code runs directly on the native environment, whereas ETL signals that most code runs as part of a separate transformation engine not directly related to the environment.

Currently in BimlFlex, the source-to-staging processes that follow the ELT paradigm always use either an SSIS or an ADF Copy activity to move the data onto the data solution environment before the processing can start. In the scenario outlined above, when the operational system is hosted on the same environment as the data solution, this landing step can be skipped.

With the 2022 R2 release, it will now be possible to use the new Pushdown Extraction feature to deliver this outcome, and make sure the integration of data in the solution can be achieved without requiring the additional landing step or Copy Activity.

Enabling Pushdown Extraction at Project level will direct BimlFlex to generate Stored Procedures that replace the initial landing of data using SSIS and/or ADF.

Upcoming Business Modeling webinars

We believe Business Modeling is a feature that is core to delivering lasting and manageable data solutions. To promote, explain and discuss this feature (and the planned improvements), we have organized a number of webinars.

If this is of interest, please register now for the first two webinars. These presentations will focus on the benefits of creating a business model, the structured approach to do so, how to map data to the business model, and much more.

Each webinar has US (EST) and EU/AUS (CET) time slots. The sessions will be presented live and recordings will also be made available afterwards.

More details here.

Dev Diary - Customizing file paths for Delta Lake

The Delta Lake storage layer offers interesting opportunities to use your Azure Data Lake Gen2 storage account in ways that are similar to using a database. One of the drivers for BimlFlex to support Azure Data Factory (ADF) Mapping Data Flows ('data flows') is to be able to offer a 'lakehouse' style architecture for your data solution, using Delta Lake.

BimlFlex supports delivering a Delta Lake-based solution by using the 'Mapping Data Flows' integration template (currently in preview). This integration template directs the BimlFlex engine to deliver the output of the design metadata as native data flows. These can then be directly deployed to Azure Data Factory.

In BimlFlex, configuring the solution design for Delta Lake works the same way as using any other supported connection. The difference is that the connection maps to an Azure Data Lake Gen2 Linked Service. The container specified in the connection refers to the root blob container in the data lake resource.

Recently, we have added additional flexibility to configure exactly where the files are placed inside this container. This is important, because directories/folders act as 'tables' in Delta Lake if you were to compare it to a database.

So, even though you may still configure different connections at a logical level in BimlFlex (e.g. to indicate separate layers in the design), they can now point to the same container, using separate directories to keep the data apart. You can have your Staging Area and Persistent Staging Area in the same container, in different directories. Or you can place these in the same container and directory using prefixes to identify each layer. And you can now add your Data Vault and Dimensional Model to the same container as well.

This way, BimlFlex now lets you define where your 'tables' are created in your Delta Lake lakehouse design.
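To make the layout options concrete, the sketch below composes a folder path from a container, an optional directory and an optional prefix. It is a hypothetical Python illustration of the idea only; `delta_table_path` and its parameters are assumptions and do not reflect BimlFlex setting names or generated output.

```python
from typing import Optional


def delta_table_path(
    container: str,
    table: str,
    directory: Optional[str] = None,
    prefix: str = "",
) -> str:
    """Compose the folder that acts as a 'table' inside a container."""
    parts = [container]
    if directory:
        # Separate layers by directory within the same container.
        parts.append(directory.strip("/"))
    # Alternatively (or additionally) separate layers by a name prefix.
    parts.append(f"{prefix}{table}")
    return "/".join(parts)


if __name__ == "__main__":
    print(delta_table_path("datalake", "Account", directory="staging"))
    print(delta_table_path("datalake", "Account", directory="shared", prefix="psa_"))
```

The first call keeps each layer in its own directory, while the second shares one directory and uses a prefix to tell the layers apart.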

Dev Diary - Configuring load windows and filters for Mapping Data Flows

When developing a data solution, it is often a requirement to be able to select data differentials ('deltas') when receiving data from the operational systems that act as the data 'source'.

This way, not all data is loaded every time a given source object (table, file, etc.) is processed. Instead, data can be selected from a certain pointer, or value, onwards. The highest value that is identified in the resulting data set selection can be maintained in the data logistics control framework. It is saved in the repository, so that the next time the data logistics process starts, the data can be selected from this value onwards again.

This is sometimes referred to as a high water mark for data selection. Every time a new data differential is loaded based on the previously known high water mark, a new (higher) water mark is recorded. This assumes there is any data available for the selection, otherwise the high water mark remains as it was. The process is up-to-date in this case. There is nothing to do until new data changes arrive.

The range between the most recent high water mark and the highest value of the incoming data set can be referred to as the load window. The load window, as a combination of a 'from' and a 'to' value, is used to filter the source data set.

To implement this mechanism, the data logistics process first retrieves the most recent high water mark from the repository (the 'from' value). Then, the data source is queried to retrieve the current highest possible value (the 'to' value). The 'from' and 'to' values define the load window, and are added to the selection logic that connects to the data source.

If the 'from' and 'to' values are the same, there is no new data to process. The selection can be skipped. In this scenario, there also is no need to update the high water mark for the next run. If the data retrieval were to be executed, it would not return data anyway.

When the 'to' value differs from the 'from' value, it is saved to the repository as the new high water mark.
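The mechanism above can be sketched in a few lines. This is a minimal Python illustration under stated assumptions: the repository is a plain dictionary, rows carry an integer marker, and the window excludes the 'from' value and includes the 'to' value. `run_delta_load` is a hypothetical name, not a BimlFlex function.

```python
from typing import Dict, List, Tuple

Row = Tuple[int, str]  # (marker value, payload)


def run_delta_load(
    repository: Dict[str, int],
    source_rows: List[Row],
    key: str = "high_water_mark",
) -> List[Row]:
    """Select rows inside the load window and advance the high water mark."""
    # 'from' value: the most recent high water mark from the repository.
    from_value = repository.get(key, 0)
    # 'to' value: the current highest value available in the source.
    to_value = max((marker for marker, _ in source_rows), default=from_value)

    if to_value <= from_value:
        # No new data: skip the selection and leave the high water mark as is.
        return []

    # Filter the source with the load window (from, to].
    selected = [row for row in source_rows if from_value < row[0] <= to_value]
    # Record the new, higher water mark for the next run.
    repository[key] = to_value
    return selected
```

Running the function twice against unchanged source data returns rows the first time and an empty list the second time, because the stored high water mark already equals the highest source value.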

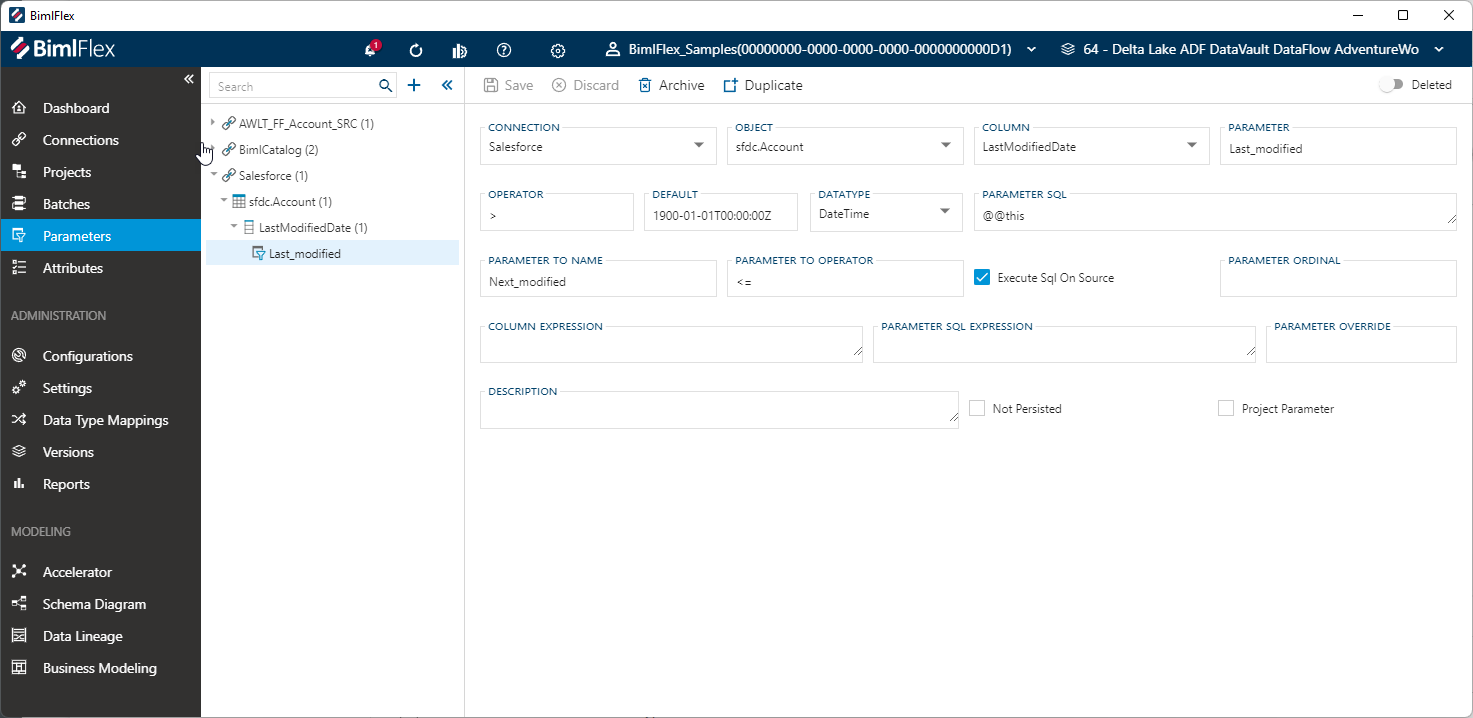

Configuring load windows in BimlFlex

In BimlFlex, this mechanism can be implemented using parameters. Parameters are essentially filters that can be used to limit the data selection for the object that they apply to.

A parameter can have a 'from' and a 'to' component, or only a 'from' one. If both are specified, BimlFlex will treat this as a load window. If only the 'from' part is specified, it will be treated as a filter condition.

In the example below, the Salesforce 'Account' object has a parameter defined for the 'LastModifiedDate' column. The 'from' name of this value is 'Last_modified', and the 'to' name is 'Next_modified'.

Because Salesforce is an unsupported data source in Mapping Data Flows ('data flows'), the data logistics that is generated from this design metadata will include a Copy Activity to first 'land' the data. Because this is the earliest opportunity to apply the load window filter, this is done here. Driven by the parameter configuration, the query that will retrieve the data will include a WHERE predicate.

In addition, BimlFlex will generate 'lookup' procedures that retrieve, and set, the high water mark value using the naming specified in the parameter.
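As a rough sketch of how a parameter definition could translate into a WHERE predicate, consider the Python below. The `Parameter` class and the `build_query` function are hypothetical and do not reflect how BimlFlex generates its queries. The placeholder syntax (`@Last_modified`) and the choice of comparison operators are assumptions as well.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Parameter:
    """Hypothetical stand-in for a parameter definition."""
    column: str
    from_name: str
    to_name: Optional[str] = None  # no 'to' name means a plain filter


def build_query(table: str, parameter: Parameter) -> str:
    """Build a SELECT statement that applies the parameter as a predicate."""
    predicate = f"{parameter.column} > @{parameter.from_name}"
    if parameter.to_name is not None:
        # Both parts are present, so the parameter acts as a load window.
        predicate += f" AND {parameter.column} <= @{parameter.to_name}"
    return f"SELECT * FROM {table} WHERE {predicate}"


window = Parameter("LastModifiedDate", "Last_modified", "Next_modified")
filter_only = Parameter("LastModifiedDate", "Last_modified")

window_query = build_query("Account", window)
filter_query = build_query("Account", filter_only)
```

The two placeholders would be filled at runtime with the values that the lookup procedures retrieve from the repository.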

Direct filtering in Mapping Data Flows

In regular loading processes, where the data flow can directly connect to the data source, the Copy Activity is not generated. In this case, the filtering is applied as early as possible in the data flow process. When this can be done depends on the involved technologies.

For example, using a database source it is possible to apply this to the SQL SELECT statement. When using inline processing for Delta Lake or file-based sources this is done using a Filter activity.

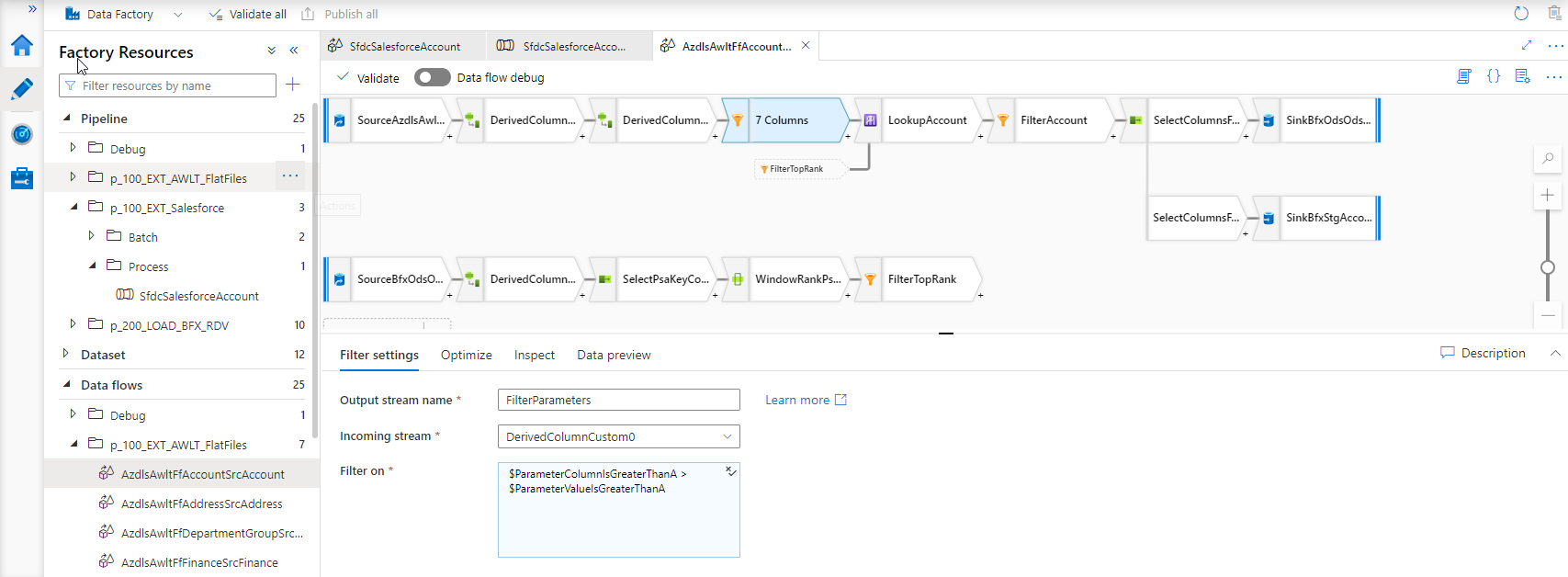

For example, as displayed in this screenshot:


True to the concepts outlined in earlier blog posts, the BimlFlex parameters are themselves defined as Mapping Data Flow parameters to allow for maximum flexibility. The executing ADF pipeline passes the parameter values down into the data flow.

Next steps

At the time of writing, database sources have not yet been optimized to push the selection down to the data source as a WHERE clause, even though this is conceptually the most performant outcome. However, the filtering shown in the screenshot above already works so, functionally, the output is the same.

It is equally possible to now define load windows on file-based data sources, and this is also an area to investigate further. Using data flows, the BimlFlex parameter concept for generating load windows is not restricted to database sources any longer.

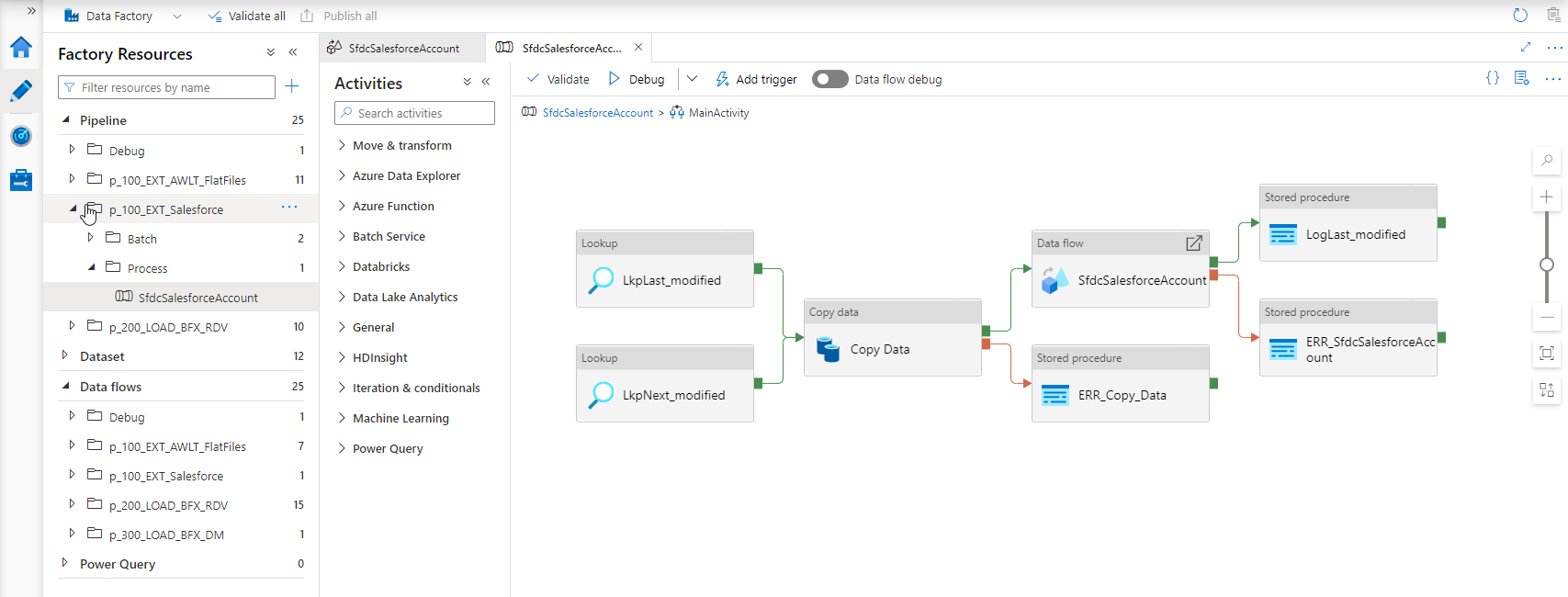

Dev Diary - Connecting to unsupported data sources using Mapping Data Flows and BimlFlex

When configuring BimlFlex to use Mapping Data Flows ('data flows'), the generated data flow will in principle directly connect to the configured Source Connection and process the data according to further configurations and settings.

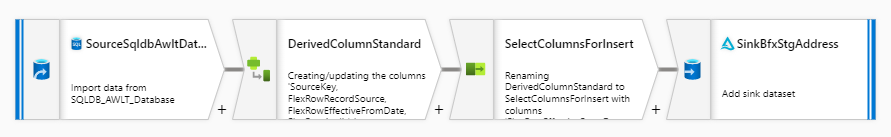

For example, to initially 'stage' data into the data solution, patterns such as the ones below can be generated:

In both these cases, however, this relies on data flows to actually be able to directly connect to the data source. But, this is not always supported. The following link to the Microsoft documentation shows which technology is supported by the various areas of Azure Data Factory (ADF).

At Varigence, we still want to enable our users to mix-and-match the BimlFlex project configuration using our supported data sources, even though this may not (yet) be supported directly by the ADF technology in question.

For example, BimlFlex supports using Salesforce REST APIs as a data source, and this can be used to generate ADF pipelines that stage and process data further. However, if you wanted to use data flows to implement complex transformations for a Salesforce data source, for example using derived columns, this would be an issue because data flows cannot connect to Salesforce directly.

To make sure our users can still use data flows for data sources that are not (yet) natively supported, the BimlFlex engine has been updated to detect this. If this is the case, the generated output will include additional processing steps to first 'land' the data into a data lake or blob storage, and then process the data from here using data flows.

In practice, this means that an additional Copy Data activity will be added to the ADF pipeline that starts the data flow. The ADF pipeline acts as 'wrapper' for the data flow and already includes the interfaces to the runtime control framework (BimlCatalog).

To summarize, if a BimlFlex project is configured to use data flows and:
- contains a source connection that is supported, then a direct connection will be generated
- contains a source connection that is not supported, then an additional landing step will be generated
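The decision summarized above can be expressed as a small sketch. The Python below is illustrative only: the `NATIVELY_SUPPORTED` set is an assumed, incomplete list (the authoritative list is in the Microsoft documentation) and `plan_pipeline` is a hypothetical function, not part of BimlFlex.

```python
from typing import List, Set

# Assumption for illustration only: source types that data flows can read
# directly. Consult the Microsoft documentation for the real list.
NATIVELY_SUPPORTED: Set[str] = {"AzureSqlDatabase", "DeltaLake"}


def plan_pipeline(source_type: str) -> List[str]:
    """Return the ordered steps for the pipeline that wraps the data flow."""
    if source_type in NATIVELY_SUPPORTED:
        # Supported source: the data flow connects to it directly.
        return ["Data Flow: read from source"]
    # Unsupported source: land the data first, then read from the landing area.
    return ["Copy Data: source to landing", "Data Flow: read from landing"]


direct = plan_pipeline("AzureSqlDatabase")
landed = plan_pipeline("Salesforce")
```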

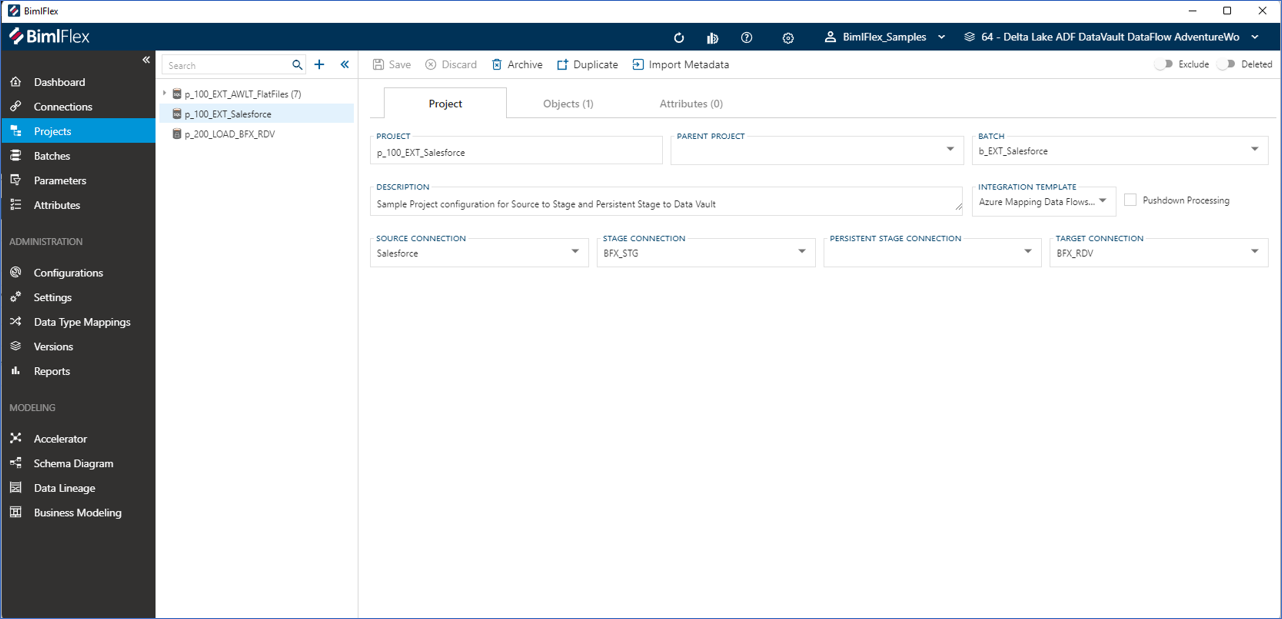

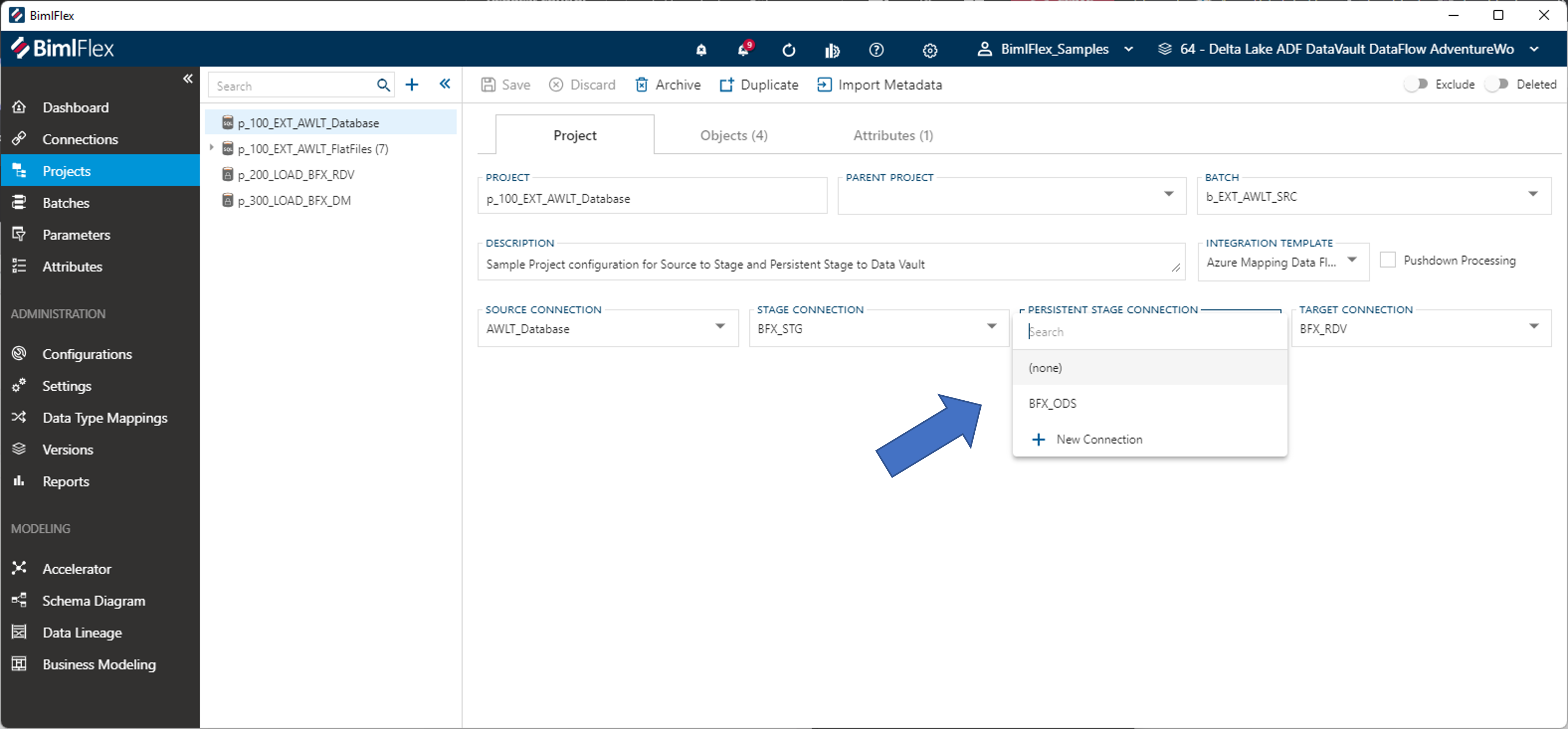

Consider the following project configuration:

In this example, a Salesforce connection is configured as source. A staging connection is also defined and ultimately the data will be integrated into a Data Vault model. No persistent staging area connection is configured for this project. Note that the integration stage is set to Mapping Data Flows. Because Salesforce connections are not natively supported for data flows, a landing step will be added by BimlFlex.

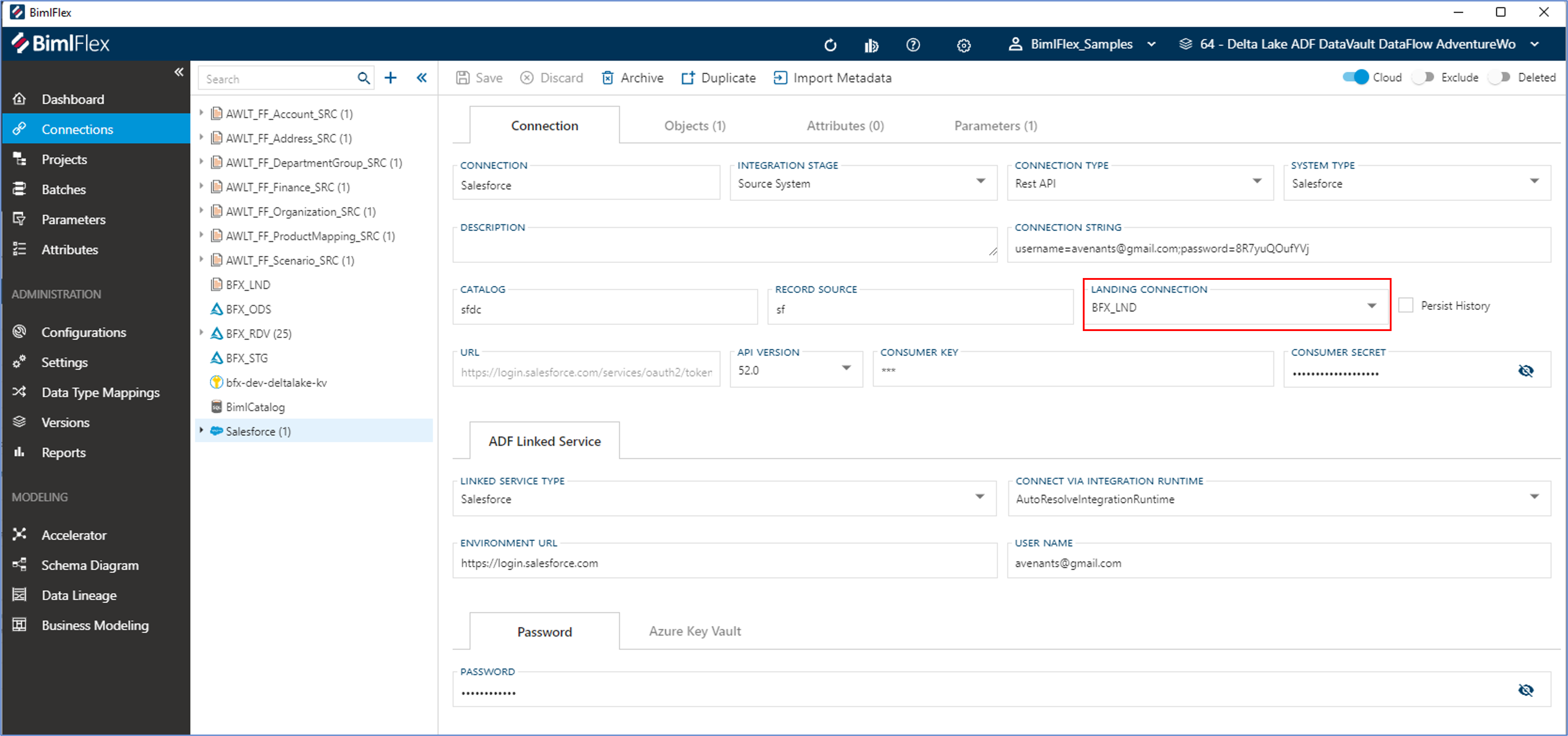

To direct BimlFlex where to land the data, the source connection will need to be configured with a landing connection. This is shown in the screenshot below.

The result in the generated output will appear as per the screenshot below. The Copy Data activity lands the data before the data flow processes it into the staging area.

Dev Diary - Adding Transformations to Mapping Data Flows

The Mapping Data Flows ('data flows') feature of Azure Data Factory (ADF) provides a visual editor to define complex data logistics and integration processes. Data flows provide a variety of components to direct the way the data should be manipulated, including a visual expression editor that supports a large number of functions.

Work is now nearing completion to make sure BimlFlex can incorporate bespoke logic using this expression language, and generate the corresponding Mapping Data Flows.

For every object in every layer of the designed solution architecture, it is possible to add derived columns. Derived columns are columns that are not part of the 'source' selection in a source-to-target data logistics context. Instead, they are derived in the data logistics process itself.

This is done using Derived Column Transformations in Mapping Data Flows, in a way that is very similar to how this works in SQL Server Integration Services (SSIS).

In BimlFlex, you can define complex transformation logic this way using the data flow expression syntax. The resulting code will be added to the selected Mapping Data Flow patterns, and visible as Derived Column Transformations. You can also define dependencies between derived columns, for example that the output of a calculation is used as input for the next one.

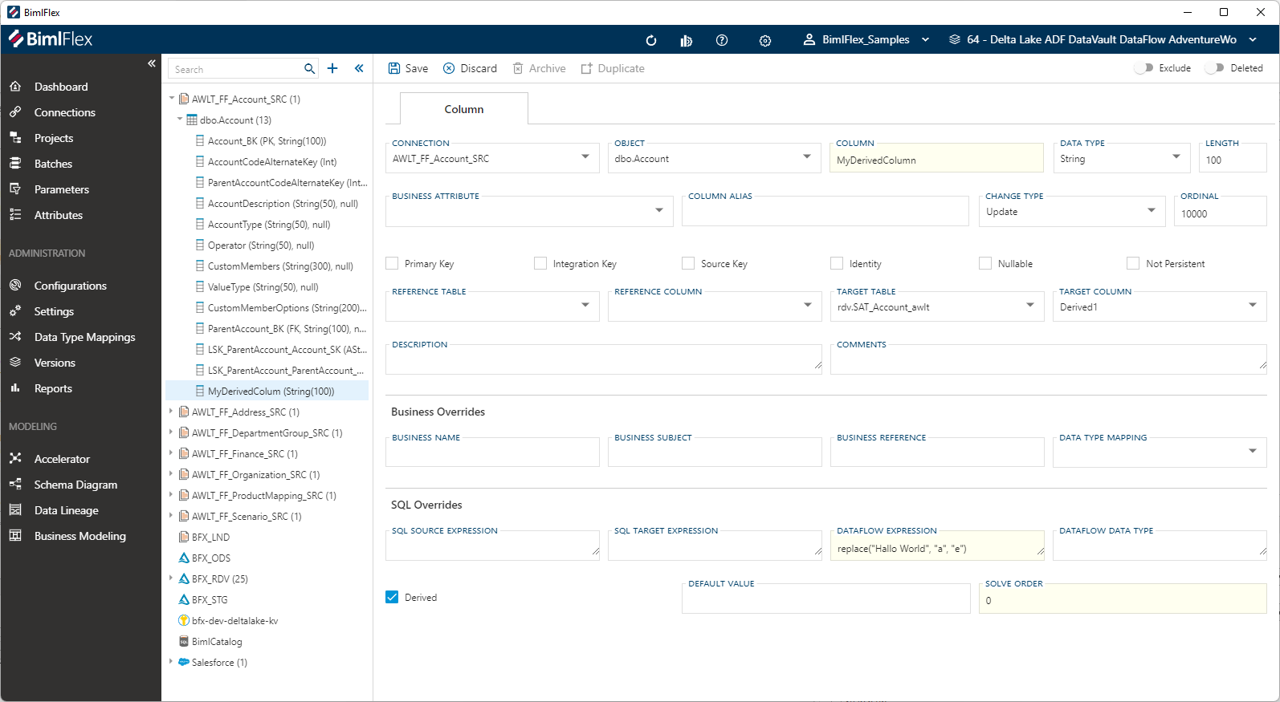

Consider the screenshot below.

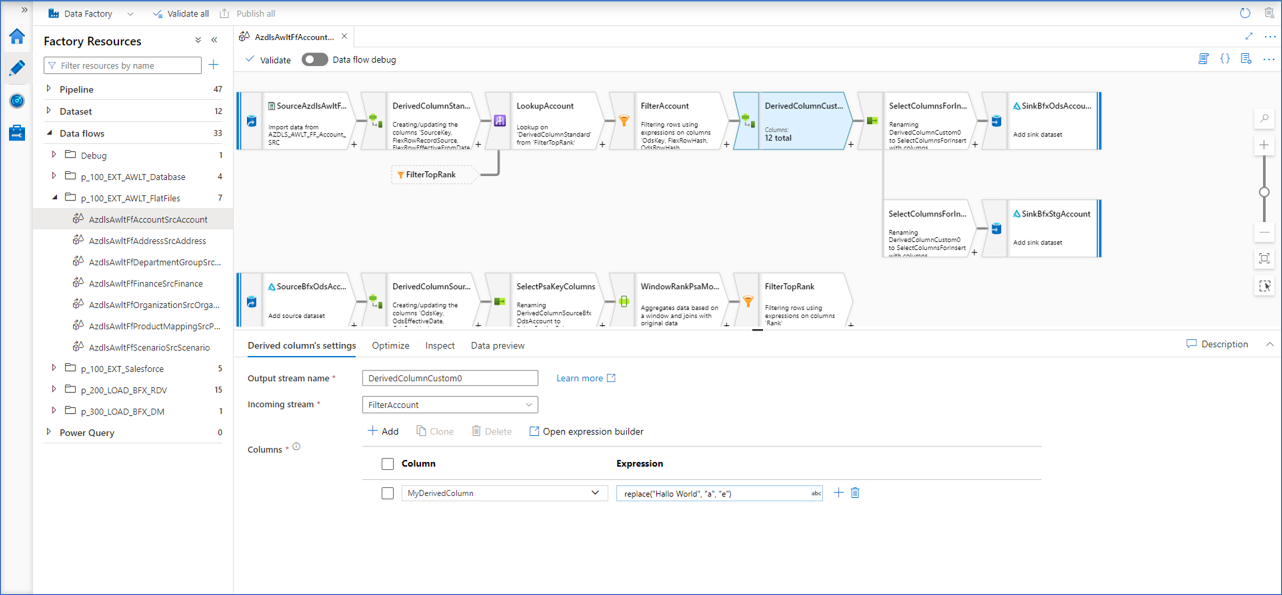

The column 'MyDerivedColumn' is defined as part of the 'Account' object. The 'IsDerived' checkbox is checked, and the 'Dataflow Expression' is provided. This metadata configuration will generate a Derived Column Transformation with this column name, and the expression.

The resulting column is added to the output dataset.

In the first screenshot, the 'solve order' property was also set - with a value of 0.

The solve order directs BimlFlex to generate the logic in a certain (incremental) order. A lower solve order will be created as an earlier Derived Column Transformation, and a higher solve order as a later one. The exact numbers do not matter, only that some are higher or lower than others.

If multiple derived columns are defined with the same solve order, they will be generated in the same Derived Column Transformation. This makes it possible to break apart complex logic in separate steps, and use them for different purposes.
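The solve order behaviour can be illustrated with a short sketch. The Python below is a hypothetical model of the grouping only: the column names (other than 'MyDerivedColumn') and the `plan_transformations` function are made up for the example, and it does not represent the BimlFlex implementation.

```python
from itertools import groupby
from typing import List, Tuple

# Hypothetical derived column metadata: (column name, solve order).
DerivedColumn = Tuple[str, int]


def plan_transformations(columns: List[DerivedColumn]) -> List[List[str]]:
    """Group derived columns into transformations, ordered by solve order."""
    # sorted() is stable, so columns with the same solve order keep their
    # original relative order inside a transformation.
    ordered = sorted(columns, key=lambda column: column[1])
    return [
        [name for name, _ in group]
        for _, group in groupby(ordered, key=lambda column: column[1])
    ]


columns = [("NetAmount", 10), ("MyDerivedColumn", 0), ("GrossAmount", 10), ("Margin", 25)]
steps = plan_transformations(columns)
```

Each inner list corresponds to one Derived Column Transformation. Only the relative order of the numbers 0, 10 and 25 matters, not their values.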

This is one way to allow potentially complex logic to be defined in the metadata, and use this to generate consistent output that meets the requirements.

Delivering data from a Data Vault

As part of our recent 2022 R1 release there have been countless updates to our reference documentation. In the background, much of this is generated from our BimlFlex code and is now also available as tooltips and descriptions when working with BimlFlex day-to-day.

Improvements have been made across the board for our documentation websites, and certain elements have been highlighted in this blog, for example our updated documentation on Extension Points.

There are two areas worth mentioning specifically around Data Vault implementation, and delivering results using the 'Business' Data Vault concept and into Dimensional Models.

Have a look at our updated Data Vault section, and specifically how to implement the following using BimlFlex:

BimlFlex 2022 R1 is available!

We are super excited to announce the newest version of BimlFlex!!!

The 2022 R1 release introduces the Business Modeling feature, adds support for many new source systems, and improves the overall experience with various bug fixes.

See what has been done here in our release notes.

This release is the culmination of many months of hard work by the team, and it is a major step forward in making the BimlFlex approach as easy and encompassing as it can be.

Supporting the release, many documentation upgrades have been made on our reference documentation sites as well, so check it out!

Dev Diary – Generating a Mapping Data Flow Staging process without a Persistent Staging Area

A few weeks ago, we covered the generation of a Mapping Data Flows Staging process for Azure Data Factory (ADF), using BimlFlex. This process uses a Persistent Staging Area (PSA) to assert if incoming data is new or changed by implementing a lookup and comparison.

There are some obvious limitations to this particular pattern. For example, this pattern does not support detecting records that have been physically deleted from the data source (a 'physical' or hard delete), which may happen for certain data sources. The lookup to the PSA is the equivalent of a Left Outer Join in this sense.

And, generally speaking, not everyone needs or wants to implement a PSA in the first place.

This initial Staging pattern is just one of many patterns that are added to the Mapping Data Flows feature, because being able to use multiple patterns is necessary to develop and manage a real-world data solution.

It is not uncommon for a data solution to require various different Staging patterns to meaningfully connect to the source data across potentially many applications. Sometimes, multiple Staging patterns are needed even for a single data source.

This is because individual data sets may need to be accessed and staged in specific ways, such as the ability to detect the deleted records that were mentioned earlier. Some source data sets benefit from this ability, where others may explicitly not want this logic in place because this may result in a performance penalty without any functional gains.

Using BimlFlex, the design and configuration of the data solution directs what patterns will be used and where they are applied. In other words, different configurations result in different patterns being generated.

An example of how modifying the design in BimlFlex will change the Staging pattern is simply removing the PSA Connection from the Project.

If there is a PSA Connection in place, the Mapping Data Flow will be generated with the PSA lookup and target (sink). Once you remove this Connection, the pattern that will be used is more of a straightforward data copy.

The team at Varigence is working through these and other configurations to make sure the necessary patterns are covered, including delete detection, landing data in blob storage and much more.

Creating custom column-level transformations in BimlFlex

In the real world, many data solutions require a high degree of flexibility so that they can cater to unique scenarios.

This can be necessary because of specific systems that require integration, or simply because certain data needs bespoke logic to be interpreted correctly. BimlFlex offers ways to define specific transformations at column level using Custom Configurations. This is one of various features that allow a high level of customization for designing a data solution.

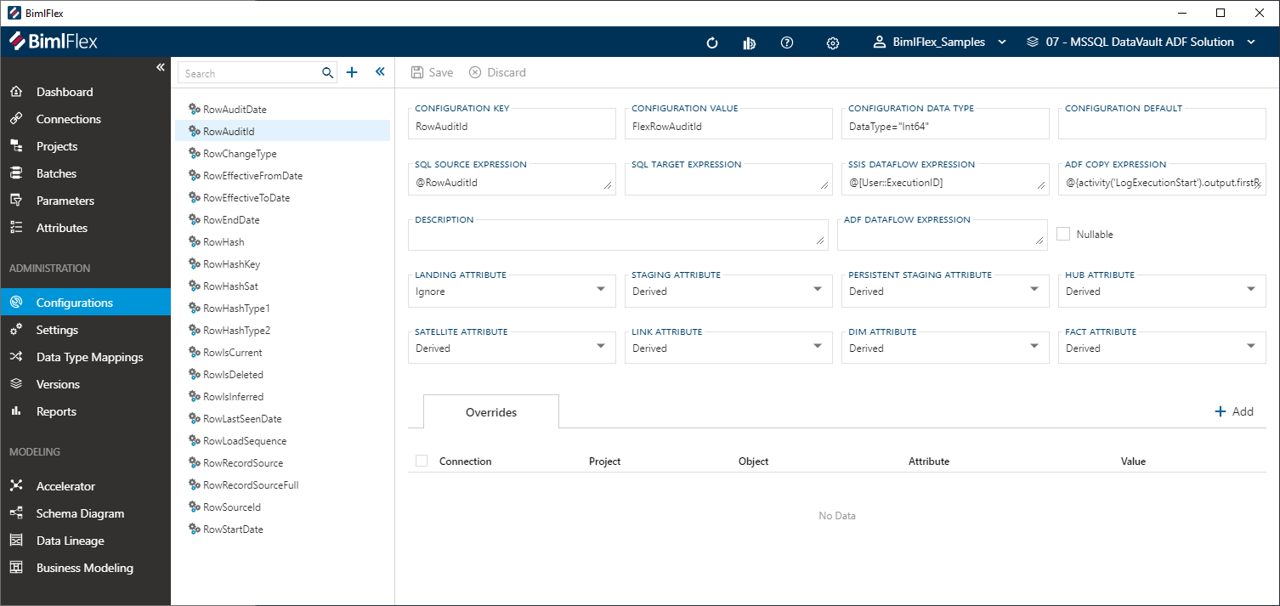

Out of the box, BimlFlex already provides a number of configurations. These are found in the 'Configurations' screen in the BimlFlex App:

These standard configurations can be combined with the override framework so that they apply to certain projects, tables (objects), batches, or only to certain stages in the architecture. This standard way of defining data logistics behavior already covers most typical use-cases, but if additional customization is required Custom Configurations can be added as well.

A Custom Configuration works the same way as the standard configuration; it can be applied to certain scenarios using overrides and by definition itself. It is also possible for users to add their expression logic in the native syntax (e.g. SSIS, SQL, Mapping Data Flows). BimlFlex will interpret the configurations, and add these as columns in the data logistics process with the specified expression.

So, for example, if you want to add a column that performs a specific calculation, or captures runtime information, you can add a Custom Configuration and apply this to the area you want this column to be added to.

This is implemented in the screenshot below.

A configuration with the name of 'RandomNumber' is defined, with the Configuration Value of 'RandomValue'. It is configured to apply only to Staging Area processes without further exceptions or overrides (it is 'derived' as a staging attribute). Because it is set as 'derived', the ADF DataFlow Expression 'random(10)' will be used.

Defining a Custom Configuration like this will result in the generation of an additional column called 'RandomValue' to the data set, and with a transformation defined as 'random(10)'. If the workflow is run, a random number value will be created and saved with the data.

This is a simple example, but having the ability to add native logic to a Custom Configuration allows for any degree of complexity that is required.

Configurations can be used to implement specific column-level logic, so that this can be taken into account when the data logistics processes are generated. Similar features that target other areas of the solution are settings and extension points.

Dev Diary - First look at a source-to-staging pattern in Mapping Data Flows

The 2021 BimlFlex release is being finalized, and will be available soon. This release contains a preview version of Mapping Data Flow patterns that can be used to generate this type of output from BimlFlex. The corresponding (and updated) BimlStudio will of course have the support for the full Mapping Data Flow Biml syntax.

The first pattern you will be likely to see is the loading of a data delta from a source into a Staging Area and an optional Persistent Staging Area (PSA). Whether a PSA is added is controlled by adding a PSA connection to the project that sources the data. So, adding a PSA connection will add a PSA to the process, unless overridden elsewhere.

Conceptually, the loading of data into a Staging Area and PSA are two separate data logistics processes. For Mapping Data Flows, these are combined into a single data flow object to limit the overhead and cost involved with starting up, and powering down, the Integration Runtime. Data is loaded once and then written multiple times from the same data flow. This approach is something we'll see in the Data Vault implementation as well.

This means that a source-to-staging loading process has one source, and one or two targets depending on whether the PSA is enabled. BimlFlex will combine these steps into a single Mapping Data Flow that looks like this:

This is by no means the only way to load data from a data source into the solution. This layer in particular requires various ways to load the data, simply because it cannot always be controlled how the data is accessed or received. Potentially many limitations in applications, technology, process and organization apply, and this drives the need for different patterns to cater for different systems and / or scenarios. The staging layer is often the least homogeneous in terms of approach for the data solution, and the full BimlFlex solution will see a variety of different patterns driven by settings and configurations.

If we consider this particular pattern, we can see that the PSA is used to check if data is already received. If no PSA is available, the data is just copied from the source into the (Delta Lake) Staging Area.

The validation whether data is already available is done using a Lookup transformation. The lookup compares the incoming record (key) against the most recent record in the PSA. If the incoming key does not exist yet, or it does exist but the checksums are different, then the record is loaded as data delta into the Staging Area and also committed to the PSA. 'Most recent' in this context is the order of arrival of data in the PSA.
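The lookup and comparison described above can be sketched in a few lines. The Python below is an illustration under assumptions, not the generated data flow: the record shape, the `detect_delta` function and the use of SHA-256 as the checksum are all choices made for this example.

```python
import hashlib
from typing import Dict, List, Tuple

Record = Tuple[str, str]  # (key, payload); hypothetical record shape


def checksum(payload: str) -> str:
    """Hash the payload so records can be compared cheaply."""
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def detect_delta(incoming: List[Record], latest_psa: Dict[str, str]) -> List[Record]:
    """Keep records whose key is new or whose checksum differs from the PSA."""
    delta = []
    for key, payload in incoming:
        known = latest_psa.get(key)  # None means the lookup found no match
        if known is None or known != checksum(payload):
            delta.append((key, payload))
    return delta


# Most recent checksum per key, as it would be read from the PSA.
latest_psa = {"A1": checksum("Acme|Gold"), "B2": checksum("Bolt|Silver")}
incoming = [("A1", "Acme|Gold"), ("B2", "Bolt|Bronze"), ("C3", "Core|Gold")]

delta = detect_delta(incoming, latest_psa)
```

Here 'A1' is unchanged and dropped, 'B2' has a different checksum, and 'C3' is a new key, so only the last two are loaded as data delta.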

At the moment the supported sources and targets for this process are Azure SQL databases and Delta Lake, but the Biml language now supports all connections that are provided by Azure Data Factory and Mapping Data Flows so more will be added over time.

However, using Delta Lake as an inline dataset introduces an interesting twist in that no SQL can be executed against the connection. This means that all logic has to be implemented as transformations inside the data flow. For now, the patterns are created with this in mind although this may change over time.

The most conspicuous consequence of this requirement is that the selection of the most recent PSA record is implemented using a combination of a Window Function and Filter transformation. The Window Function will perform a rank, and the Filter only allows the top rank (most recent) to continue.
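To make the rank-and-filter idea concrete, here is a small sketch of the same logic outside of a data flow. The Python below is hypothetical: the row shape and the `most_recent` function are assumptions for the example, with an arrival sequence number standing in for the order of arrival in the PSA.

```python
from typing import Dict, List, Tuple

# Hypothetical PSA row: (key, arrival sequence, checksum).
PsaRow = Tuple[str, int, str]


def most_recent(rows: List[PsaRow]) -> List[PsaRow]:
    """Rank rows per key by arrival (newest first) and keep only rank 1."""
    by_key: Dict[str, List[PsaRow]] = {}
    for row in rows:
        by_key.setdefault(row[0], []).append(row)

    ranked: List[Tuple[int, PsaRow]] = []
    for key_rows in by_key.values():
        # Window step: order each partition by arrival, descending, and rank it.
        ordered = sorted(key_rows, key=lambda row: row[1], reverse=True)
        ranked.extend((rank, row) for rank, row in enumerate(ordered, start=1))

    # Filter step: only the top rank (most recent) continues.
    return [row for rank, row in ranked if rank == 1]


psa = [("A1", 1, "x1"), ("A1", 3, "x3"), ("B2", 2, "y2"), ("A1", 2, "x2")]
latest = most_recent(psa)
```

Keeping the ranking and the filtering as two separate steps mirrors the two transformations in the data flow, where a single SQL query is not available.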

Over the next few weeks we'll look into more of these patterns, why they work the way they do and how this is implemented using Biml and BimlFlex.

Dev Diary - Integration with the BimlCatalog

Every data solution benefits from a robust control framework for data logistics. One that manages if, how and when individual data logistics processes should be executed. A control framework also provides essential information to complete the audit trail that tracks how data is processed through the system and is ultimately made available to users.

Working with Biml via either BimlExpress or BimlStudio provides the language, compiler, and development environment to create your own data solution frameworks from scratch, which means the control framework must be defined also.

BimlFlex has the advantage that the generated output is already integrated with the proprietary control framework that is provided as part of the solution: the BimlCatalog. The BimlCatalog is the repository that collects runtime information and manages the registration of individual data logistics processes. It is used to control the execution and provides operational reporting on the overall loading process such as run times and success / failure rates.

The BimlCatalog is available freely and can be integrated with your own custom solution – for example using BimlExpress. However, when using BimlFlex the integration of the patterns with the BimlCatalog is already available out of the box. This is the topic of today’s post.

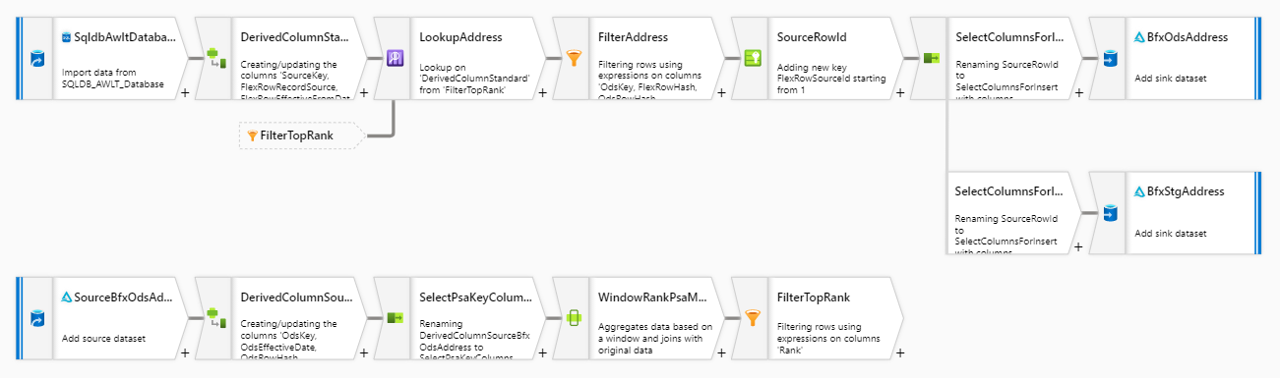

BimlCatalog integration for Mapping Data Flows

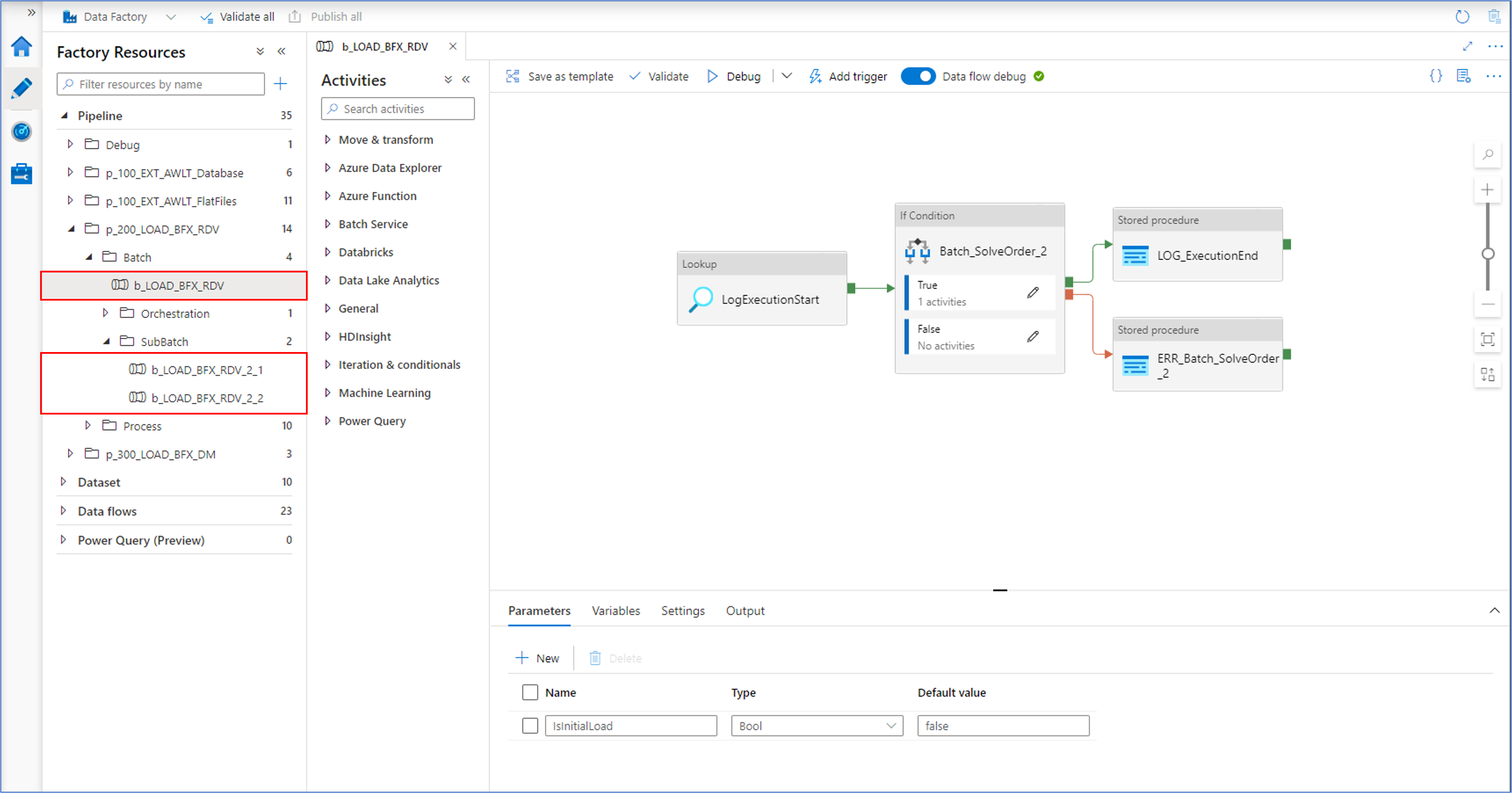

The BimlCatalog classifies data logistics as either an individual process or a batch. A batch is a functional unit of execution that calls one or more individual processes. Processes can either be run individually, or as part of the batch if one is defined. In Azure Data Factory, a batch is essentially an Execute Pipeline that calls other pipelines.

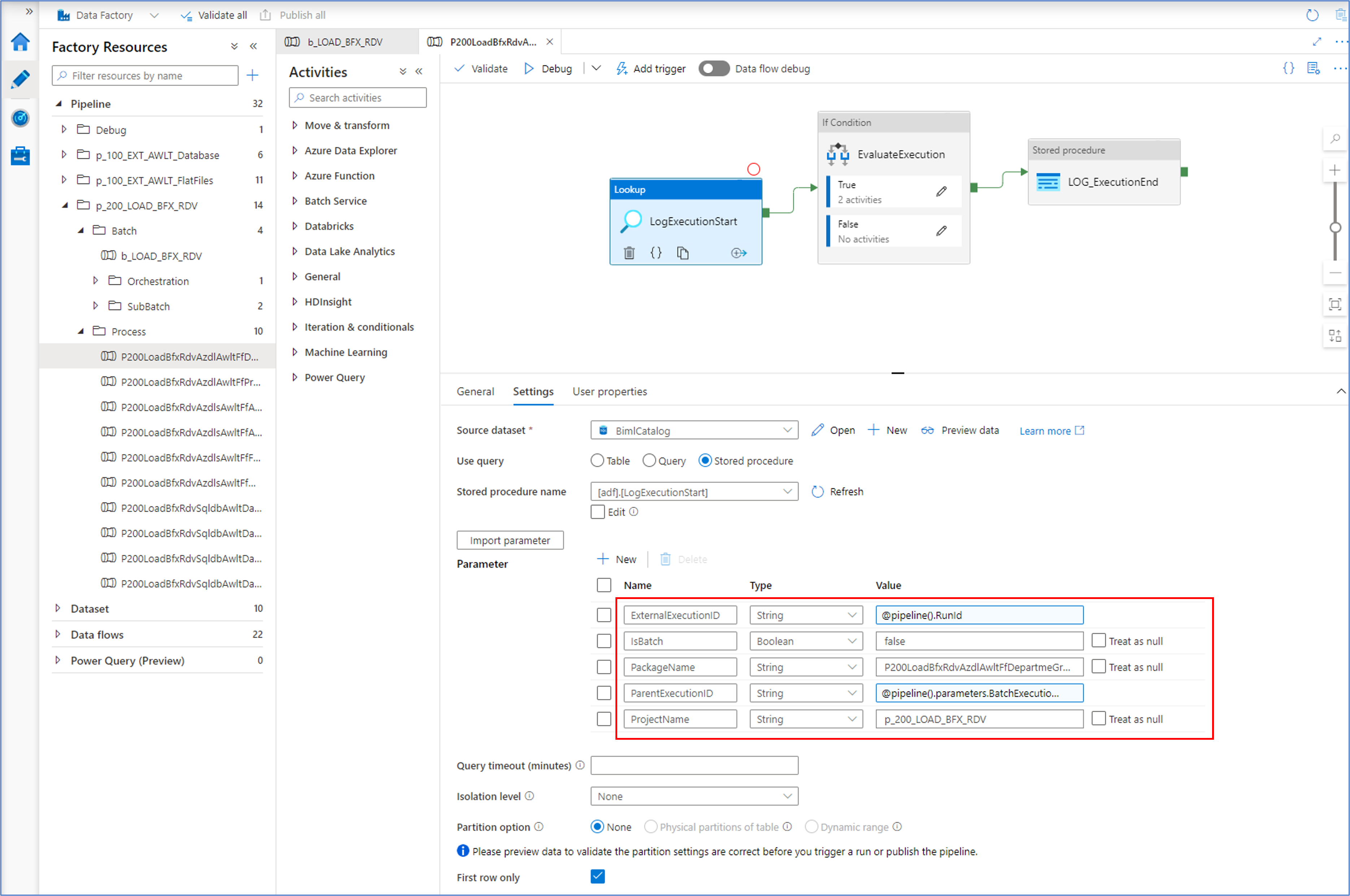

To inform the control framework of this, a flag that labels a process as a batch is passed to the stored procedure that is provided by the BimlCatalog. This procedure, LogExecutionStart, also accepts the execution GUID, the name of the process and the project it belongs to as input parameters.

The LogExecutionStart will run a series of evaluations to determine if a process is allowed to be run, and if so under what conditions. For example, an earlier failure may require a clean-up or it may be that the same process is already running. In this case, the control framework will wait and retry the start to allow the earlier process to complete gracefully. Another scenario is that already successfully completed processes that have been run as part of a batch may be skipped, because they already ran successfully but have to wait until the remaining processes in the batch are also completed without errors.
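The kind of evaluation described above can be pictured with a toy model. The Python below is a simplified assumption made for illustration only. It is not the logic of the LogExecutionStart procedure, and the status values, the `batch_incomplete` flag and the returned actions are all hypothetical.

```python
from typing import Dict


def evaluate_start(process: str, state: Dict[str, str], batch_incomplete: bool) -> str:
    """Decide what to do when a process asks to start.

    `state` maps process names to the status of their latest execution:
    "running", "failed" or "succeeded". A missing entry means never run.
    """
    status = state.get(process)
    if status == "running":
        return "wait"      # the same process is active: wait and retry later
    if status == "failed":
        return "cleanup"   # an earlier failure needs a clean-up first
    if status == "succeeded" and batch_incomplete:
        return "skip"      # already done; the rest of the batch must catch up
    return "run"


state = {"LoadAccount": "succeeded", "LoadContact": "failed"}
account_action = evaluate_start("LoadAccount", state, batch_incomplete=True)
contact_action = evaluate_start("LoadContact", state, batch_incomplete=True)
```

In this example a rerun of the batch skips the process that already succeeded and cleans up the one that failed before running it again.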

After all the processes are completed, a similar procedure (LogExecutionEnd) informs the framework that everything is OK.

A similar mechanism applies to each individual process, as is visible in the screenshot below.

In this example, the IsBatch flag is set to 'false' as this is not a process that calls other processes. Similarly, a parent execution ID is provided - the runtime execution ID of the (batch) process that calls this one.

In case something goes wrong

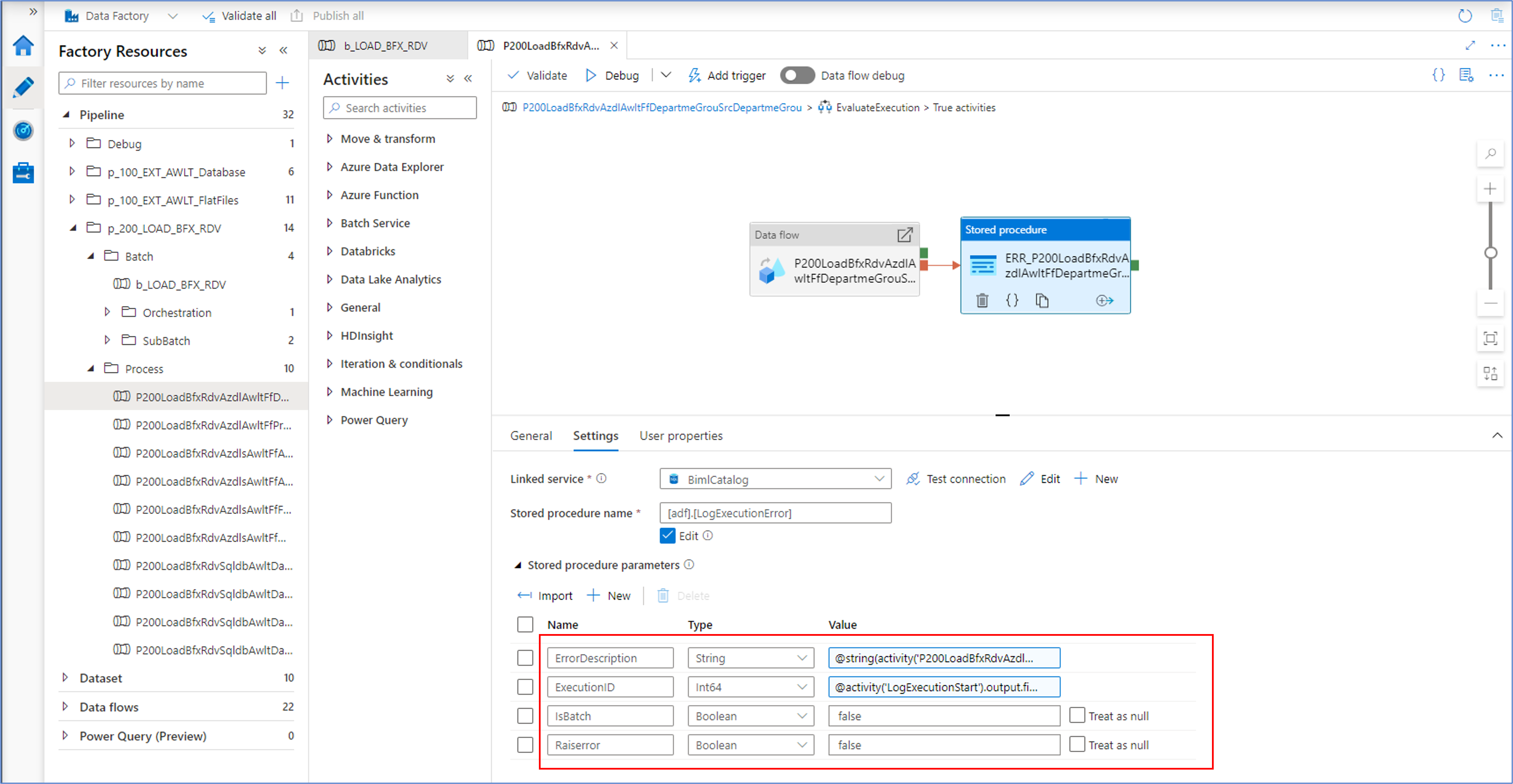

So far, the focus of the control framework integration has been on asserting a process can start and under what conditions. However, it is important to make sure that any failures are handled and reported. This is implemented at the level of each individual process.

Each individual execute pipeline that calls a Mapping Data Flow will report any errors encountered by calling the LogExecutionError procedure. Within the BimlCatalog, this will also cause the parent batch to fail and inform subsequent processes in the same batch to gracefully abort. The batch will preserve the integrity of the data for all processes it encapsulates.

Dev Diary – Orchestrating Mapping Data Flows

Earlier dev diary posts have focused on defining and deploying a Mapping Data Flow using Biml. For example, covering the Biml syntax and adding initial transformations and parameters.

Additional complexity will be added in upcoming posts to ultimately cover a fully functioning set of patterns.

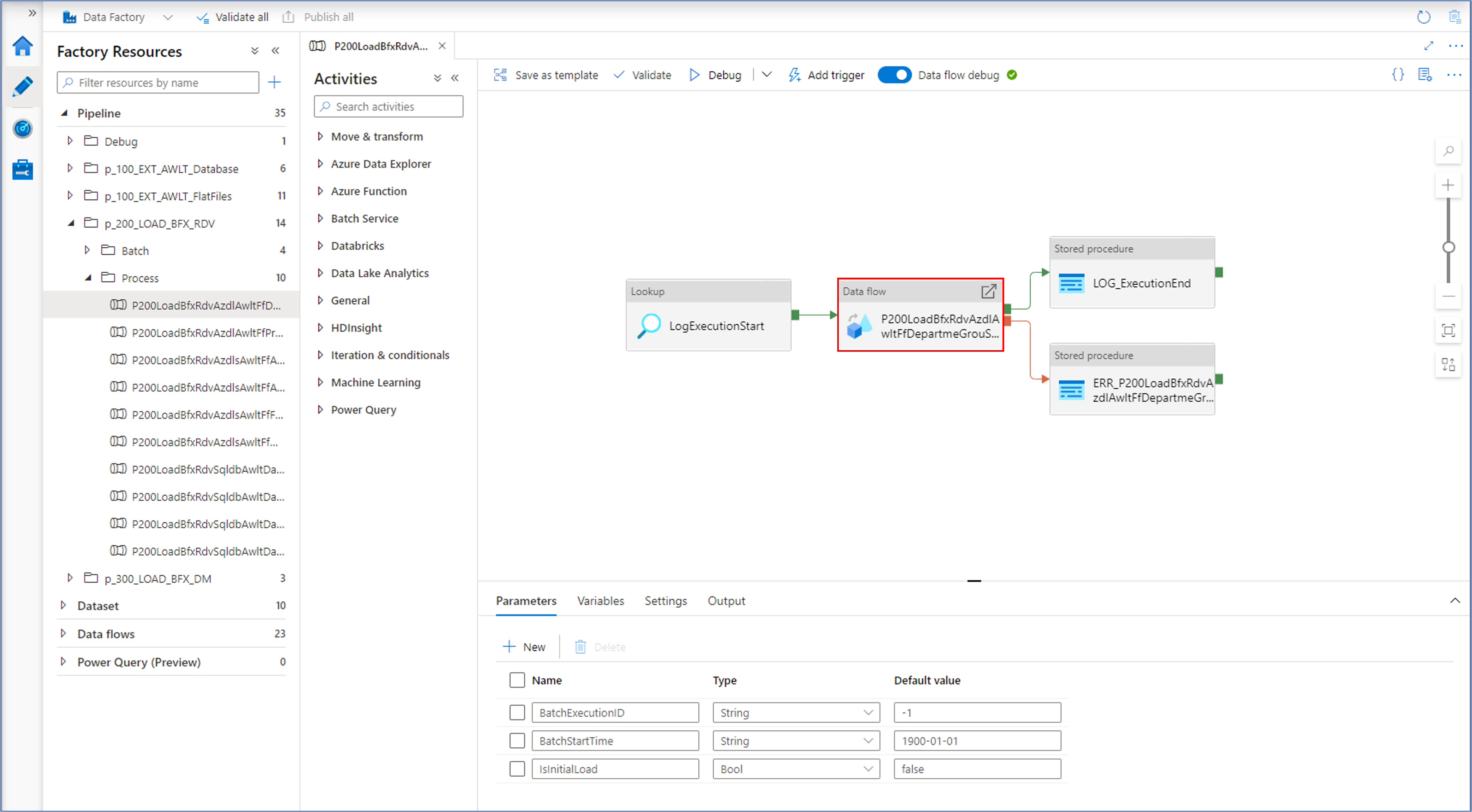

These Mapping Data Flows can be generated from BimlFlex by selecting the ‘Azure Data Flow’ Integration Template for a Project. The Project defines which data sets are in scope for a specific deliverable or purpose, and the Integration Template directs the automation engine as to what output is required, which is Mapping Data Flows in this case.

The settings, overrides and configurations govern how the resulting output will be shaped and what features are included in the resulting Mapping Data Flow.

One of these features covers the orchestration.

In Azure, a Mapping Data Flow itself is not an object that can be executed directly. Instead, it needs to be called from an Execute Pipeline. This pipeline can be run, and in turn it will start the data flow.

This means that a corresponding pipeline will need to be created as part of any Mapping Data Flow, and this is exactly what BimlFlex does out of the box. In addition to this, BimlFlex can use this pipeline to integrate the data logistics into the control framework – the BimlCatalog database. This database captures the runtime details on process execution, so that there is a record of each execution result as well as statistics on volumes processed and more.

An example of the generated output is shown in the screenshot below:

The BimlCatalog will also issue a unique execution runtime point that is passed down from the pipeline to the Mapping Data Flow where it can be added to the data set. This means that a full audit trail can be established; it is always known which process was responsible for any data results throughout the solution.

But BimlFlex will generate more than just the pipeline wrapper for the Mapping Data Flow and integration into the control framework. It will also generate a batch structure in case large amounts of related processes must be executed at the same time – either sequentially or in parallel.

To manage potentially large numbers of pipelines and Mapping Data Flows, BimlFlex automatically generates a ‘sub-batch’ middle layer to manage any limitations in Azure. For example, at the time of writing Azure does not allow more than 40 activities to be defined as part of a single pipeline.

BimlFlex will generate as many sub-batches as necessary to manage limits such as these, and each process will start the next one based on the degree of parallelism defined in metadata.
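Splitting a large batch into sub-batches is essentially a chunking problem. The Python sketch below shows one way to do this, assuming the limit of 40 quoted above. The `split_into_sub_batches` function and the pipeline names are hypothetical, and the sketch ignores the degree of parallelism.

```python
from typing import List

PIPELINE_LIMIT = 40  # limit quoted in this post; may change over time


def split_into_sub_batches(pipelines: List[str], limit: int = PIPELINE_LIMIT) -> List[List[str]]:
    """Split pipeline names into consecutive sub-batches of at most `limit`."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    return [pipelines[i:i + limit] for i in range(0, len(pipelines), limit)]


pipelines = [f"Load_{n:03d}" for n in range(95)]
sub_batches = split_into_sub_batches(pipelines)
sizes = [len(batch) for batch in sub_batches]
```

With 95 pipelines this produces three sub-batches, the last one only partially filled.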

The next post will cover how to start generating this using Biml.

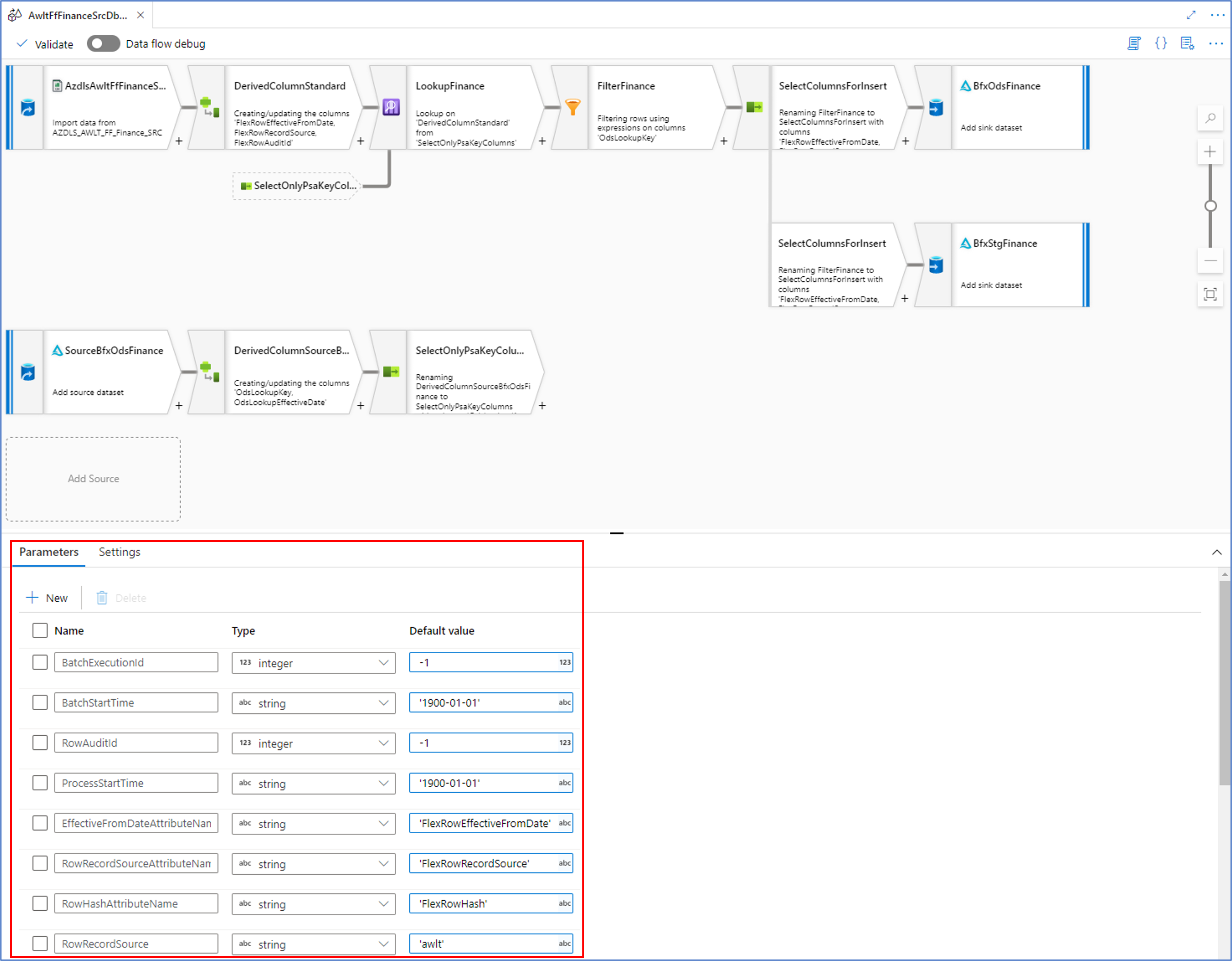

Dev Diary – Defining Mapping Data Flow Parameters with Biml

The previous post on developing Delta Lake using Azure Data Factory Mapping Data Flows covered how and why we use parameters in our BimlFlex-generated Mapping Data Flow data logistics processes.

As a brief recap, parameters for a Mapping Data Flow can both inherit values from the process that executes it (the Execute Pipeline) as well as hold values that can be used to define the data flow itself.

For BimlFlex this means the Mapping Data Flows can integrate with the BimlCatalog, the data logistics control framework, and provide a central access to important information received from the metadata framework. For example, parameters contain the composition of the Integration Key and the names of standard fields as they have been configured in the BimlFlex App.

Adding Parameters using Biml

The code snippet below uses the example from the previous post on this topic and adds a number of parameters to the Mapping Data Flow. Once defined, these become accessible to the Execute Pipeline that calls the Mapping Data Flow so that information can be passed on.

The first four parameters of this example reflect this behaviour. The start time of the individual process and its batch (if there is one) are passed to the Mapping Data Flow so that these values can be added to the resulting data set.

The other parameters are examples of defining both the names of custom columns and their default values. This is really useful to dynamically add columns to your data flow.

This approach allows any custom or standard configuration to make its way to the generated data logistics process.

As a final thing to note, some parameters are defined as an array of strings by using the string[] data type. Mapping Data Flows has a variety of functionality that allows easy use of an array of values. This is especially helpful when working with areas where combinations of values are required, such as for instance calculation of full row hash values / checksums or managing composite keys. Future posts will go into this concept in more detail.
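To show why an array of column names is convenient for checksums, here is a minimal sketch of a full row hash. The Python below is an assumption-laden illustration: the `row_hash` function is hypothetical, MD5 is chosen only for the example, and the column list is a subset of the 'SourceColumnsAccount' parameter from the example in this post, joined with the '~' sanding value.

```python
import hashlib
from typing import Dict, List

# Values modelled on the parameters in this post (subset of the columns).
SOURCE_COLUMNS_ACCOUNT = ["AccountDescription", "AccountType", "Operator"]
ROW_SANDING_VALUE = "~"


def row_hash(row: Dict[str, object], columns: List[str], sanding: str) -> str:
    """Concatenate the listed columns with a delimiter and hash the result."""
    # Missing or None values become empty strings so positions stay aligned.
    parts = ["" if row.get(name) is None else str(row[name]) for name in columns]
    return hashlib.md5(sanding.join(parts).encode("utf-8")).hexdigest()


row_a = {"AccountDescription": "ab", "AccountType": "c", "Operator": None}
row_b = {"AccountDescription": "a", "AccountType": "bc", "Operator": None}

hash_a = row_hash(row_a, SOURCE_COLUMNS_ACCOUNT, ROW_SANDING_VALUE)
hash_b = row_hash(row_b, SOURCE_COLUMNS_ACCOUNT, ROW_SANDING_VALUE)
```

The sanding value keeps 'ab' + 'c' and 'a' + 'bc' from producing the same input string, so the two rows hash differently.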

For now, let's have a look at the syntax for defining parameters in Mapping Data Flows - using the Parameters segment.

<Biml xmlns="http://schemas.varigence.com/biml.xsd">
  <DataFactories>
    <DataFactory Name="bfx-dev-deltalake-demo">
      <Dataflows>
        <MappingDataflow Name="HelloWorld">
          <Sources>
            <InlineAzureSqlDataset
              Name="HelloWorldExampleSource"
              LinkedServiceName="ExampleSourceLS"
              AllowSchemaDrift="false"
              ValidateSchema="false" />
          </Sources>
          <Sinks>
            <InlineAzureSqlDataset
              Name="HelloWorldExampleTarget"
              LinkedServiceName="ExampleTargetLS"
              SkipDuplicateInputColumns="true"
              SkipDuplicateOutputColumns="false"
              InputStreamName="bla.Output" />
          </Sinks>
          <Parameters>
            <Parameter Name="BatchExecutionId" DataType="integer">-1</Parameter>
            <Parameter Name="BatchStartTime" DataType="string">'1900-01-01'</Parameter>
            <Parameter Name="RowAuditId" DataType="integer">-1</Parameter>
            <Parameter Name="ProcessStartTime" DataType="string">'1900-01-01'</Parameter>
            <Parameter Name="EffectiveFromDateAttributeName" DataType="string">'FlexRowEffectiveFromDate'</Parameter>
            <Parameter Name="RowRecordSourceAttributeName" DataType="string">'FlexRowRecordSource'</Parameter>
            <Parameter Name="RowHashAttributeName" DataType="string">'FlexRowHash'</Parameter>
            <Parameter Name="RowSandingValue" DataType="string">'~'</Parameter>
            <Parameter Name="RowSourceIdAttributeName" DataType="string">'FlexRowSourceId'</Parameter>
            <Parameter Name="RowRecordSource" DataType="string">'awlt'</Parameter>
            <Parameter Name="SourceKeyAccount" DataType="string[]">['AccountCodeAlternateKey']</Parameter>
            <Parameter Name="SourceColumnsAccount" DataType="string[]">['AccountDescription','AccountType','Operator','CustomMembers','ValueType','CustomMemberOptions']</Parameter>
            <Parameter Name="SchemaNameSource" DataType="string">'dbo'</Parameter>
            <Parameter Name="TableNameSource" DataType="string">'Account'</Parameter>
          </Parameters>
        </MappingDataflow>
      </Dataflows>
      <LinkedServices>
        <AzureSqlDatabase Name="ExampleSourceLS" ConnectionString="data source=example.com;"></AzureSqlDatabase>
        <AzureSqlDatabase Name="ExampleTargetLS" ConnectionString="data source=example.com;"></AzureSqlDatabase>
      </LinkedServices>
    </DataFactory>
  </DataFactories>
</Biml>

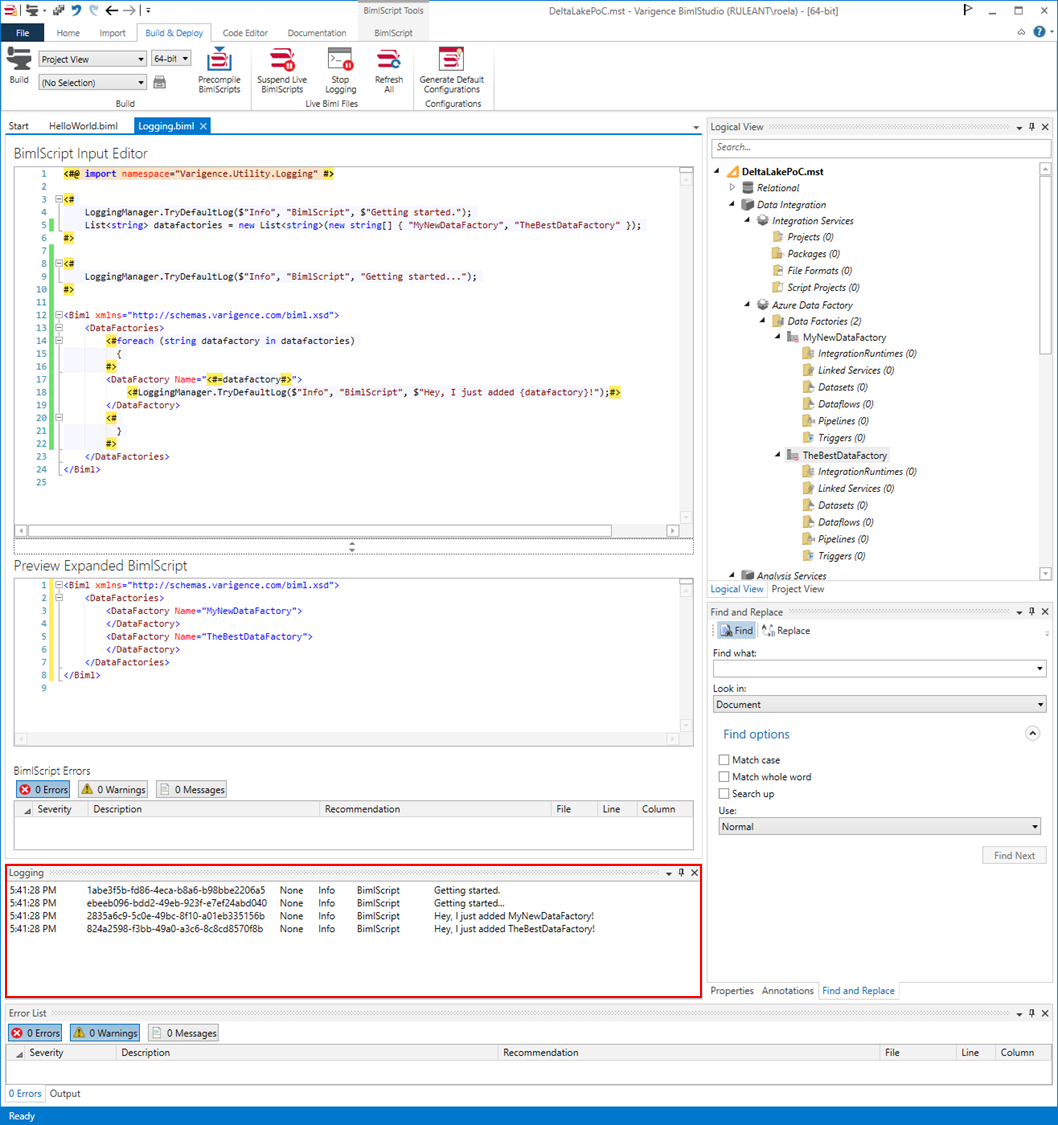

Using built-in logging for Biml

When working with Biml in any situation, be it using BimlExpress, BimlStudio or BimlFlex, it can be helpful to peek into what is happening in the background.

Some of you would have developed your own metadata framework that provides information to generate Biml, created a solution in BimlStudio or added extensions for BimlFlex. In all cases, it is possible to add logging to your solution and this is already natively available in BimlScript.

There is no need to create your own logging framework if the Biml logging already meets your needs, and it covers most common scenarios. The standard logging can write to a text buffer, an object such as a list, a file or it can trigger an event.

Working with logging is covered in detail in The Biml Book, and it is worth having a look especially if you are using BimlExpress.

But it is also possible to add logging to BimlStudio right away with just a few lines of code, with the immediate benefit that the log messages appear in the logging pane.

Make sure you enable the logging function in BimlStudio for this. This can be found in the ‘Build & Deploy’ menu.

To get started, add the Varigence Utility Logging reference to your script, and give the below code a try. This simple example creates a List of string values that are meant to be used to generate DataFactory Biml.

For each iteration, a log is written to the logging pane.

<#@ import namespace="Varigence.Utility.Logging" #>
<#
LoggingManager.TryDefaultLog($"Info", "BimlScript", $"Getting started.");
List<string> datafactories = new List<string>(new string[] { "MyNewDataFactory", "TheBestDataFactory" });
LoggingManager.TryDefaultLog($"Info", "BimlScript", "Getting started...");
#>
<Biml xmlns="http://schemas.varigence.com/biml.xsd">
  <DataFactories>
    <#
    foreach (string datafactory in datafactories)
    {
    #>
    <DataFactory Name="<#=datafactory#>">
      <#LoggingManager.TryDefaultLog($"Info", "BimlScript", $"Hey, I just added {datafactory}!");#>
    </DataFactory>
    <#
    }
    #>
  </DataFactories>
</Biml>

The result looks like the screenshot below. Happy logging!

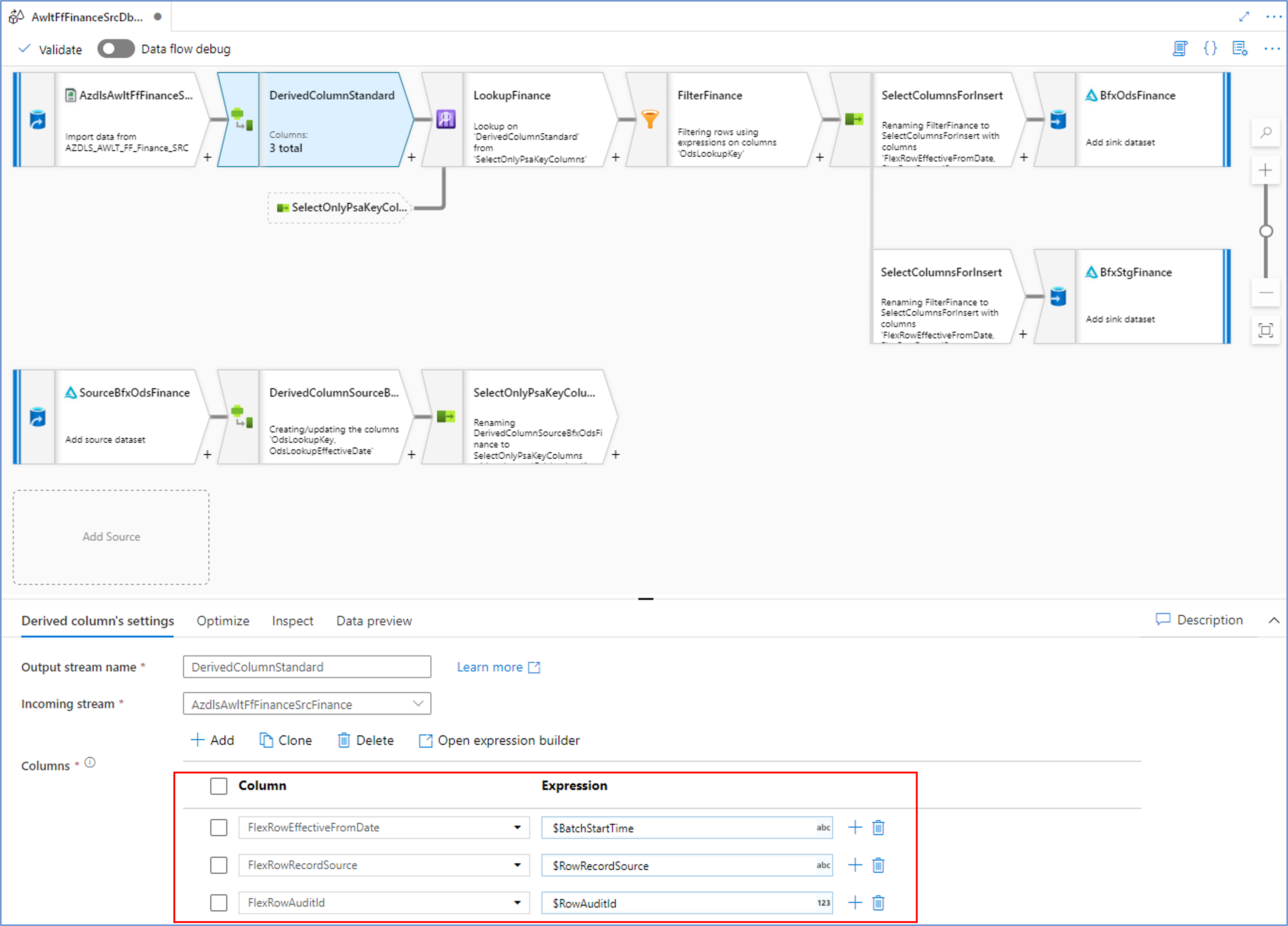

Dev Diary - Using Mapping Data Flow Parameters to dynamically use BimlFlex metadata

When using Mapping Data Flows for Azure Data Factory with inline datasets, the schema can be evaluated at runtime. This also paves the way to use one of the key features this approach enables, which is support for schema drift.

Schema drift makes it possible to evaluate or infer changes made to the schema (data structure) that is read from or written to. Without going into too much detail on this for now, the key message is that the schema is not required to be known in advance and can even change over time.

This needs to be considered for the new Data Flow Mapping patterns for BimlFlex. The Data Flow Mappings may read from multiple sources of different technology and write to an equal variety of targets (sinks), and some have stronger support for defining the schema than others.

Moreover, various components that may be used in the Data Flow Mapping require a known or defined column to function. This is to say that a column needs to be available to use in many transformations; in many cases this is mandatory.

The approach we are pioneering with BimlFlex is to make sure the generated patterns / Data Flow Mappings are made sufficiently dynamic by design by using Data Flow Mapping Parameters, so that we don't need to strictly define the schema upfront. The BimlFlex generated output for Data Flow Mappings intends to strike a balance between creating fully dynamic patterns that receive their metadata from BimlFlex, and allowing injecting custom logic using Extension Points.

Using Data Flow Mapping Parameters goes a long way in making this possible.

Using parameters, various essential columns can be predefined both in terms of name as well as value. This information can be used in the Data Flow Mapping using the dynamic content features provided.

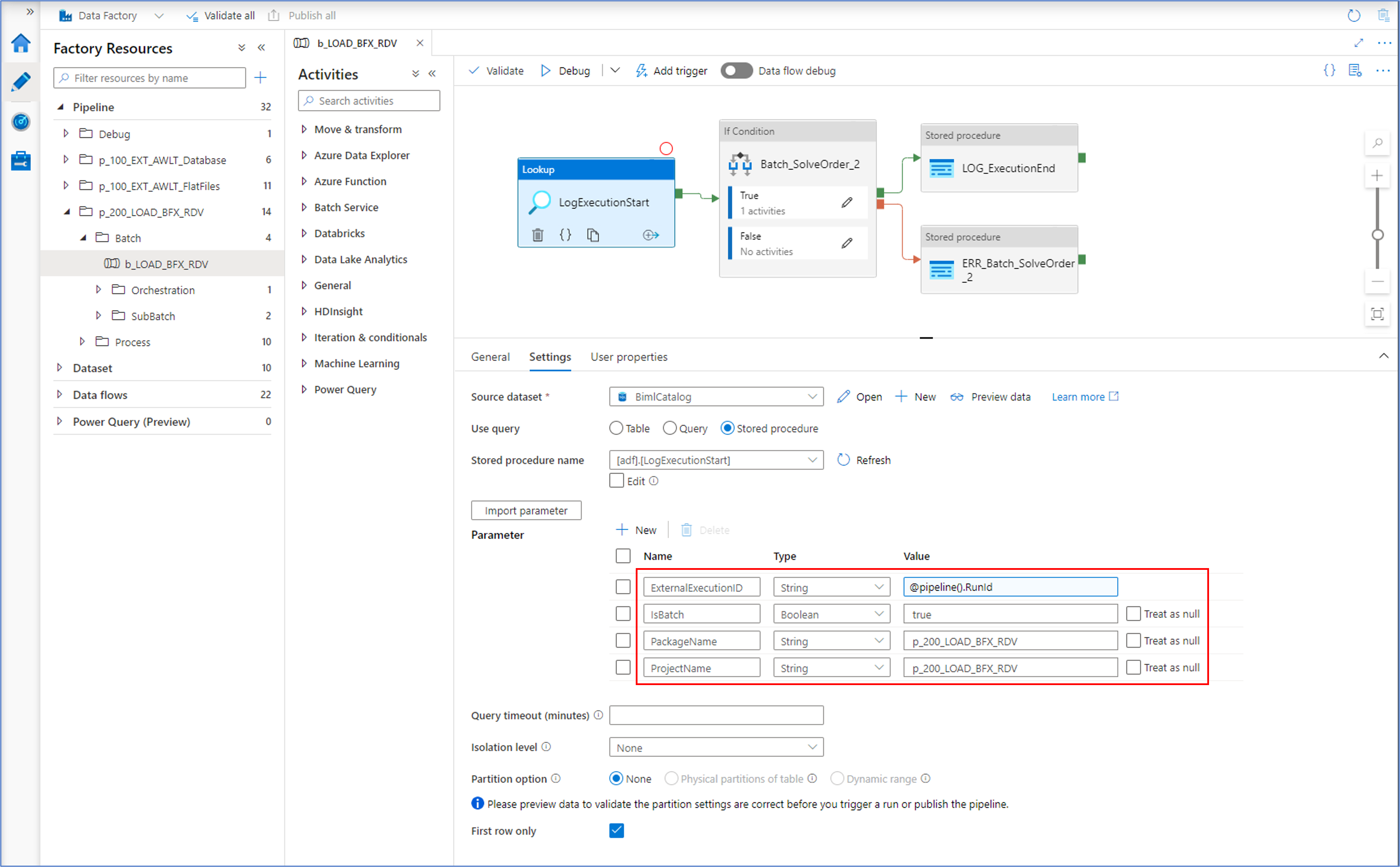

Consider the following screenshot of one of the generated outputs, which loads new data from file into a Delta Lake staging area:

In our WIP approach, BimlFlex generates the essential metadata as parameters which are visible by clicking anywhere on the Data Flow Mapping canvas outside of the visible components.

As an example, the ‘RowRecordSource’ parameter contains the name of the Record Source value from the BimlFlex metadata. This can be used directly in transformations, such as the Derived Column transformation below:

This means that we can use the names of the necessary columns as placeholders in subsequent transformations and their value will be evaluated at runtime. This can also be used to dynamically set column names, if required.

In the next posts, we’ll look at the Biml syntax behind this approach for the various transformations / components used.

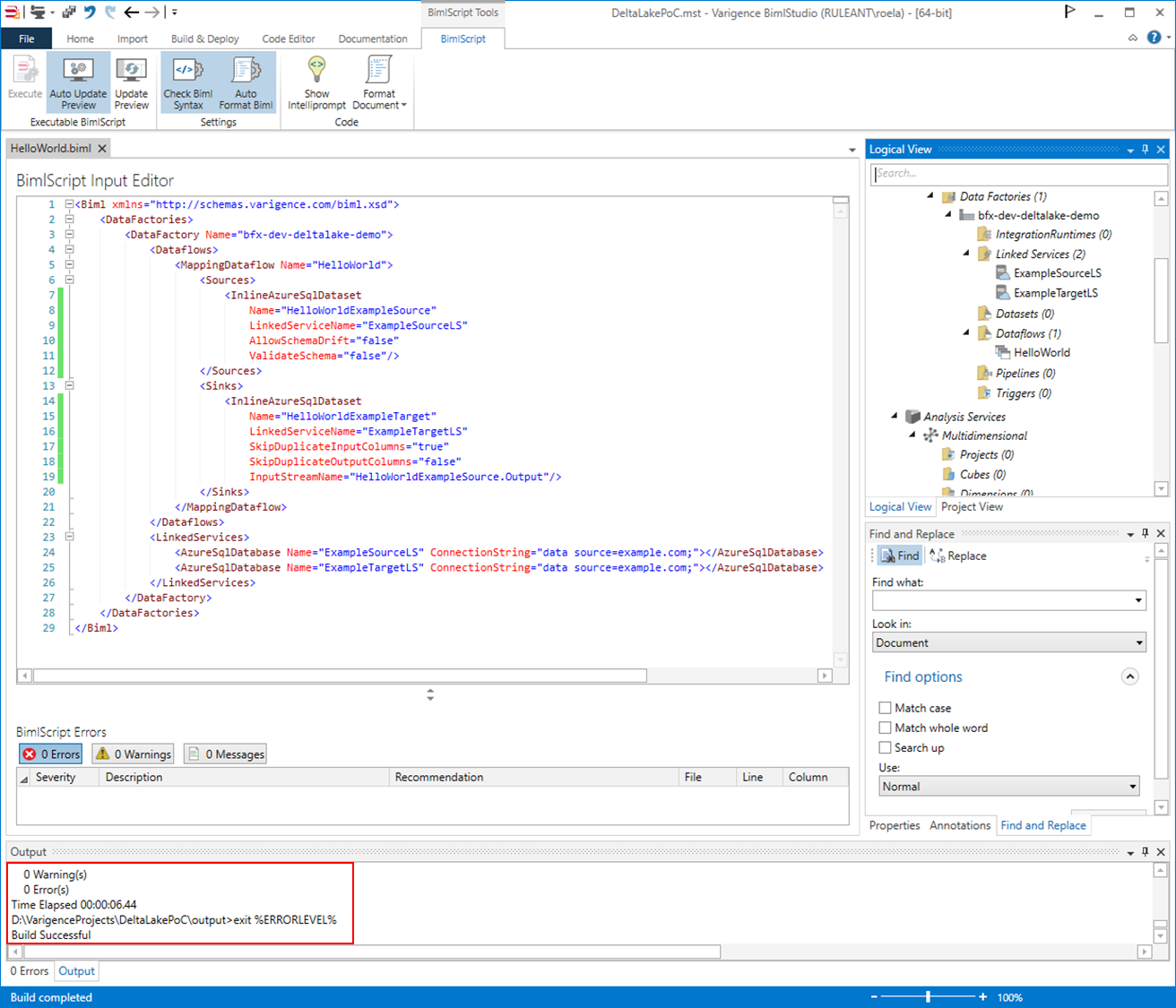

Dev Diary - Deploying Biml-Generated ADF Data Flow Mappings

In the previous post on defining Data Flow Mappings for Azure Data Factory using Biml, we looked at a simple Biml script to define a Data Flow Mapping.

You may have noticed that the solution was 'built' in BimlStudio, at the bottom of the screenshot.

The build process has successfully created the necessary Azure Data Factory artefacts to create the intended Data Flow Mapping on Azure. BimlStudio has placed these in the designated output directory.

Biml and BimlStudio have the capability to generate various artefacts including Data Definition Language (DDL) files but it is the Azure Resource Manager (ARM) templates that we are interested in for now.

BimlStudio creates an arm_template.json file and a corresponding arm_template_parameters.json file that contain everything needed to deploy the solution to Azure. For large solutions, the files will be split into multiple smaller related files to ensure deployments without errors that may happen due to technical (size) limitations. This is done automatically as part of the build process.

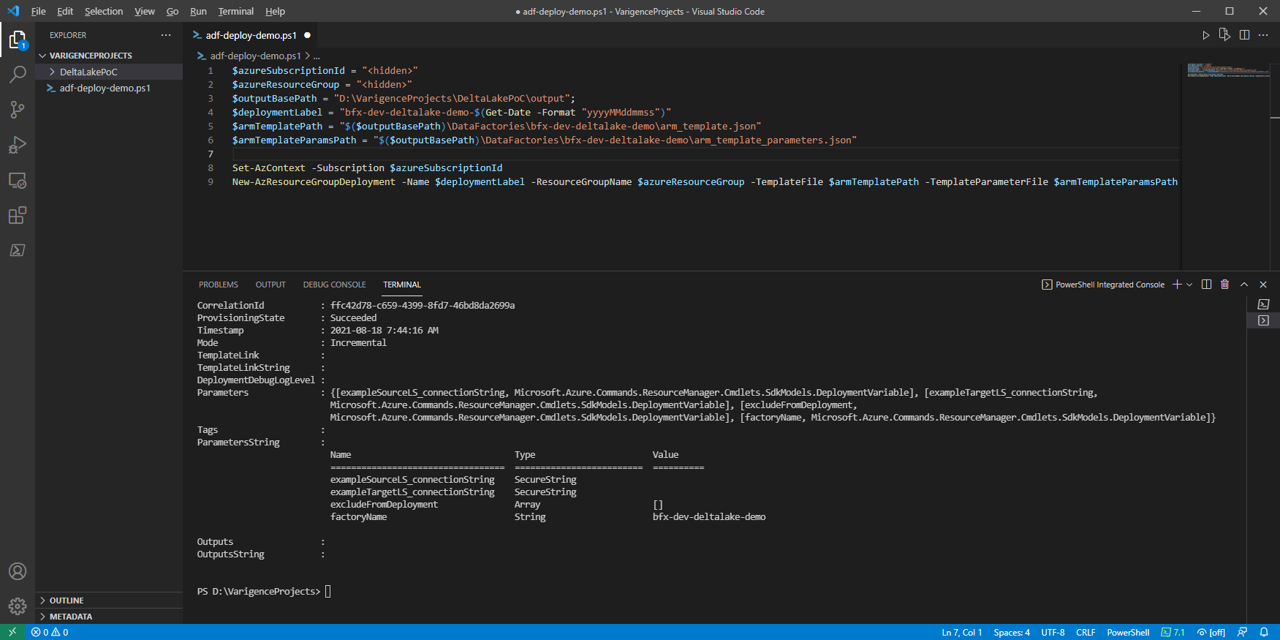

Deployment of the ARM templates can be done manually via the Azure portal, but a convenient PowerShell script can also be created using the New-AzResourceGroupDeployment cmdlet.

As an example, a script with the following structure can be used:

$azureSubscriptionId = "<subscription id>"

$azureResourceGroup = "<resource group name>"

$outputBasePath = "D:\VarigenceProjects\DeltaLakePoC\output"

# HH adds the hour, so that the label is unique and sortable within a day.

$deploymentLabel = "bfx-dev-deltalake-demo-$(Get-Date -Format "yyyyMMddHHmmss")"

$armTemplatePath = "$($outputBasePath)\DataFactories\bfx-dev-deltalake-demo\arm_template.json"

$armTemplateParamsPath = "$($outputBasePath)\DataFactories\bfx-dev-deltalake-demo\arm_template_parameters.json"

Set-AzContext -Subscription $azureSubscriptionId

New-AzResourceGroupDeployment -Name $deploymentLabel -ResourceGroupName $azureResourceGroup -TemplateFile $armTemplatePath -TemplateParameterFile $armTemplateParamsPath

Scripts such as these are automatically created using BimlFlex-integrated solutions, because the necessary information is already available here. In this example, the script has been updated manually.

When updated, the script can be run to deploy the solution to Azure. For example using Visual Studio Code:

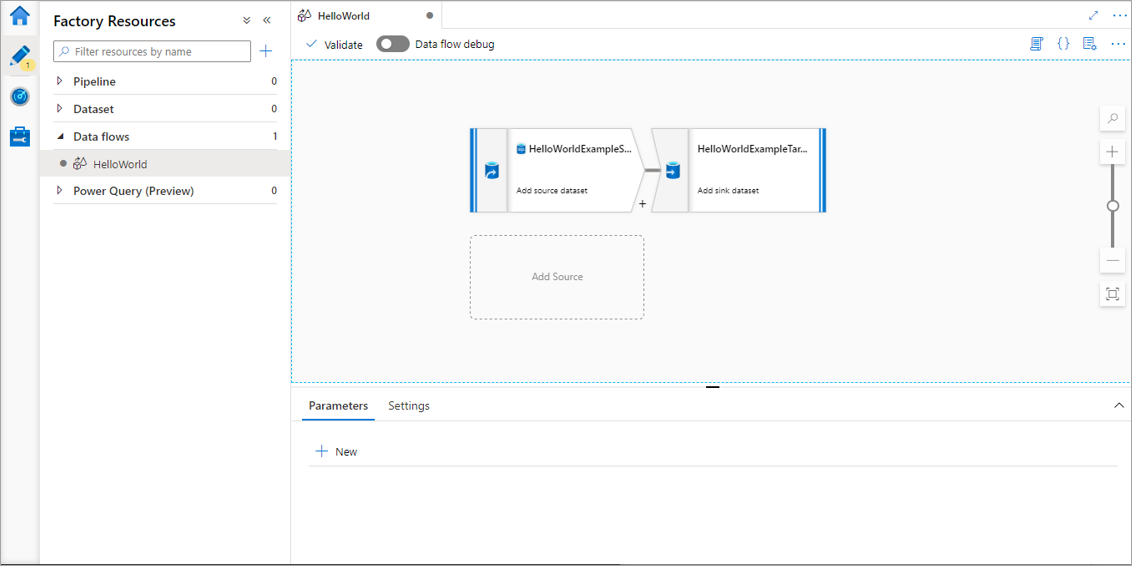

With the expected result in the target Data Factory:

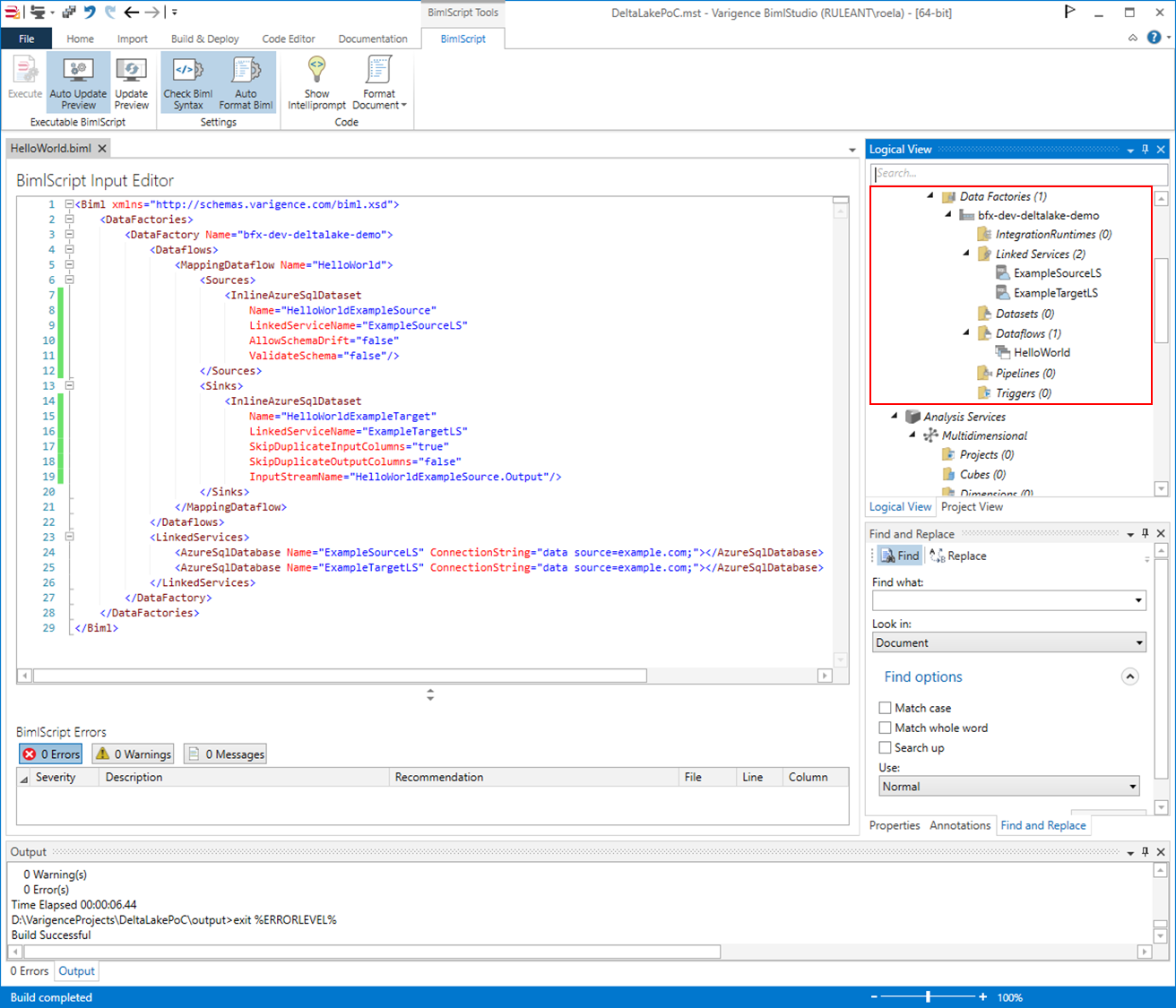
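Before running a deployment like the one above, it can help to validate the generated ARM template first, so that errors surface before anything changes in the target Data Factory. The following is a minimal sketch of that idea, not something BimlFlex generates: the function name and its parameters are hypothetical, and it assumes the Az.Resources module is installed and an authenticated session exists. Test-AzResourceGroupDeployment and New-AzResourceGroupDeployment are the standard Az cmdlets.

```powershell
# Hypothetical helper (not part of BimlFlex): validate first, deploy only if valid.
# Assumes the Az.Resources module and a prior Connect-AzAccount / Set-AzContext.
function Invoke-ValidatedArmDeployment {
    param(
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $TemplateFile,
        [Parameter(Mandatory)] [string] $TemplateParameterFile,
        [string] $DeploymentName = "bfx-deploy-$(Get-Date -Format 'yyyyMMddHHmmss')"
    )

    # Fail early if the build output is missing.
    foreach ($path in @($TemplateFile, $TemplateParameterFile)) {
        if (-not (Test-Path -Path $path -PathType Leaf)) {
            throw "File not found: $path"
        }
    }

    # Test-AzResourceGroupDeployment returns validation errors; no output means valid.
    $validationErrors = Test-AzResourceGroupDeployment `
        -ResourceGroupName $ResourceGroupName `
        -TemplateFile $TemplateFile `
        -TemplateParameterFile $TemplateParameterFile

    if ($validationErrors) {
        $validationErrors | ForEach-Object { Write-Warning "$($_.Code): $($_.Message)" }
        throw "ARM template validation failed; nothing was deployed."
    }

    New-AzResourceGroupDeployment `
        -Name $DeploymentName `
        -ResourceGroupName $ResourceGroupName `
        -TemplateFile $TemplateFile `
        -TemplateParameterFile $TemplateParameterFile
}
```

The function could be called with the same variables as the script above, for example `Invoke-ValidatedArmDeployment -ResourceGroupName $azureResourceGroup -TemplateFile $armTemplatePath -TemplateParameterFile $armTemplateParamsPath`.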

Dev Diary - Generating ADF Data Flow Mapping using Biml

The work to generate Data Flow Mappings in Azure Data Factory using the BimlFlex automation platform is nearing completion. While there is still more to do, there are also a lot of topics that are worth sharing ahead of this release.

The BimlFlex solution, as a collection of designs, settings, configurations and customizations, is provided as Biml patterns that can be accessed from BimlStudio. This means that a dynamic preview of the expected (generated) output is visualized in BimlStudio, along with the supporting Biml code.

At this stage, and if required, BimlStudio allows for further customizations using Extension Points, which support a combination of Biml script, SQL or .Net code. These will become part of the data logistics solution that will be deployed.

The build process in BimlStudio will ‘compile’ the Biml code into native artefacts for the target platform and approach. In the case of this development diary, this will be as Data Flow Mappings for Azure Data Factory.

Using Data Flow Mappings has certain advantages (and disadvantages) compared to moving and transforming data using other Azure Data Factory components such as Copy Activities. Much of the remaining work to complete this feature is about finding the best mix between the available techniques, so that these can be generated from the design metadata.

One of the advantages of using Data Flow Mappings, aside from the visual representation of the data logistics, is the ability to use inline Sources and Sinks (targets). Inline datasets allow direct access to many types of data sources without a dedicated connector object (dataset). They are especially useful when the underlying structure may evolve. Also, and especially in data lake scenarios, they offer a way to manage where the compute takes place without requiring additional compute clusters.

It is an easy and fast way to use a variety of technologies.

In this post, and the subsequent posts as well, we will use this approach as a way of explaining working with Data Flow Mappings in BimlFlex.

Biml Data Flow Mapping syntax

Because the design metadata is provided as Biml script, it makes sense to start explaining the BimlFlex Data Flow Mapping support in BimlStudio, which is the best way to work with the Biml language.

Defining a Data Flow Mapping using Biml is easy. The various features, components and properties available in Data Flow Mappings are supported as Biml XML tags.

A Data Flow Mapping is defined using the MappingDataflow element. It is part of the Dataflows collection, which is in turn part of a DataFactory.

Consider the example below:

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<DataFactories>

<DataFactory Name="bfx-dev-deltalake-demo">

<Dataflows>

<MappingDataflow Name="HelloWorld">

<Sources>

<InlineAzureSqlDataset

Name="HelloWorldExampleSource"

LinkedServiceName="ExampleSourceLS"

AllowSchemaDrift="false"

ValidateSchema="false"/>

</Sources>

<Sinks>

<InlineAzureSqlDataset

Name="HelloWorldExampleTarget"

LinkedServiceName="ExampleTargetLS"

SkipDuplicateInputColumns="true"

SkipDuplicateOutputColumns="false"

InputStreamName="HelloWorldExampleSource.Output"/>

</Sinks>

</MappingDataflow>

</Dataflows>

<LinkedServices>

<AzureSqlDatabase Name="ExampleSourceLS" ConnectionString="data source=example.com;"></AzureSqlDatabase>

<AzureSqlDatabase Name="ExampleTargetLS" ConnectionString="data source=example.com;"></AzureSqlDatabase>

</LinkedServices>

</DataFactory>

</DataFactories>

</Biml>

In this ‘Hello World’ example, a single Data Flow Mapping is created with a single Source and a single Sink. Both the Source and the Sink are defined as inline datasets, which are specific options in Biml to distinguish them from regular datasets.

Inline datasets require a connection (Linked Service) to be assigned, and for a valid result this Linked Service must also be available.

When using BimlStudio to interpret this snippet, these components will be visible in the Logical View:

This skeleton code shows how Biml supports Data Flow Mappings, and inline data sources in particular. In the next post, we will expand this into a larger pattern and then look into how BimlFlex configurations influence this output.

Delta Lake on Azure work in progress – introduction

At Varigence, we work hard to keep up with the latest in technologies so that our customers can apply these for their data solutions, either by reusing their existing designs (metadata) and deploying these in new and different ways or when starting new projects.

A major development focus recently has been to support Delta Lake for our BimlFlex Azure Data Factory patterns.

What is Delta Lake, and why should I care?

Delta Lake is an open-source storage layer that can be used 'on top of' Azure Data Lake Gen2, where it provides transaction control (Atomicity, Consistency, Isolation and Durability, or 'ACID') features to the data lake.

This supports a 'Lake House' style architecture, which is gaining traction because it offers opportunities to work with various kinds of data in a single environment, for example by combining semi-structured and unstructured data, or batch and streaming processing. This means various use-cases can be supported by a single infrastructure.

Microsoft has made Delta Lake connectors available for Azure Data Factory (ADF) pipelines and Mapping Data Flows (or simply ‘Data Flows’). Using these connectors, you can use your data lake to 'act' as a typical relational database for delivering your target models while at the same time use the lake for other use-cases.

Even better, Data Flows can use integration runtimes for the compute without requiring a separate cluster that hosts Delta Lake. This is the inline feature and makes it possible to configure an Azure Storage Account (Azure Data Lake Gen2) and use the Delta Lake features without any further configuration.

Every time you run one or more Data Flows, Azure will spin up a cluster for use by the integration runtime in the background.

How can I get started?

Creating a new Delta Lake is easy. There are various ways to implement this, which will be covered in subsequent posts.

For now, the quickest way to have a look at these features is to create a new Azure Storage Account resource of type Azure Data Lake Storage Gen2. For this resource, the ‘Hierarchical Namespace’ option must be enabled. This will enable directory trees/hierarchies on the data lake – a folder structure in other words.

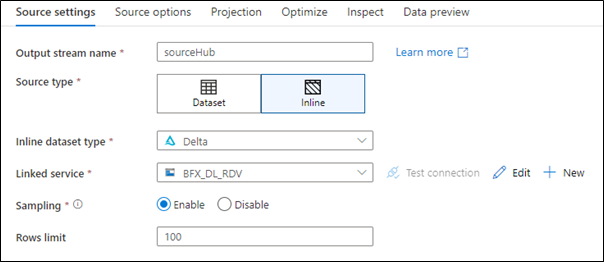
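The same storage account can also be created from a script instead of the portal. The sketch below is one possible way to do this, assuming the Az.Storage module and an authenticated session; the function name, the chosen SKU and the example values are hypothetical. The `-EnableHierarchicalNamespace` parameter of New-AzStorageAccount corresponds to the 'Hierarchical Namespace' option described above.

```powershell
# Hypothetical helper: create a storage account suitable for use as a data lake.
# Assumes the Az.Storage module and a prior Connect-AzAccount / Set-AzContext.
function New-LakeStorageAccount {
    param(
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $Name,
        [Parameter(Mandatory)] [string] $Location
    )

    # Storage account names must be 3-24 characters, lowercase letters and digits only.
    if ($Name -cnotmatch '^[a-z0-9]{3,24}$') {
        throw "Invalid storage account name: $Name"
    }

    # StorageV2 with the hierarchical namespace enabled gives a Data Lake Storage Gen2 account.
    New-AzStorageAccount `
        -ResourceGroupName $ResourceGroupName `
        -Name $Name `
        -Location $Location `
        -SkuName 'Standard_LRS' `
        -Kind 'StorageV2' `
        -EnableHierarchicalNamespace $true
}

# Example call (values are placeholders):
# New-LakeStorageAccount -ResourceGroupName '<resource group name>' -Name '<storage account name>' -Location '<region>'
```

Standard_LRS is used here only to keep the example small; the redundancy option should follow your own requirements.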

Next, in Azure Data Factory, a Linked Service must be created that connects to the Azure Storage Account.
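The Linked Service can be created in the Azure Data Factory user interface, but it can also be scripted. The sketch below builds a JSON definition for a Data Lake Storage Gen2 ('AzureBlobFS') Linked Service and shows, in comments, how it could be registered with Set-AzDataFactoryV2LinkedService. The function name and the 'DeltaLakeLS' name are hypothetical, and account key authentication is assumed purely for brevity; a managed identity or Key Vault reference may be a better fit in practice.

```powershell
# Hypothetical helper: returns the JSON definition of an ADLS Gen2 Linked Service.
function New-AdlsGen2LinkedServiceDefinition {
    param(
        [Parameter(Mandatory)] [string] $StorageAccountName,
        [Parameter(Mandatory)] [string] $AccountKey
    )

    $definition = @{
        properties = @{
            # 'AzureBlobFS' is the ADF Linked Service type for Data Lake Storage Gen2.
            type           = 'AzureBlobFS'
            typeProperties = @{
                url        = "https://${StorageAccountName}.dfs.core.windows.net"
                accountKey = @{ type = 'SecureString'; value = $AccountKey }
            }
        }
    }

    # Depth must cover the nested hashtables, otherwise they are flattened to strings.
    $definition | ConvertTo-Json -Depth 5
}

# Example usage (requires the Az.DataFactory module; values are placeholders):
# $definitionFile = Join-Path ([System.IO.Path]::GetTempPath()) 'DeltaLakeLS.json'
# New-AdlsGen2LinkedServiceDefinition -StorageAccountName '<storage account name>' -AccountKey '<account key>' |
#     Set-Content -Path $definitionFile
# Set-AzDataFactoryV2LinkedService -ResourceGroupName '<resource group name>' `
#     -DataFactoryName '<data factory name>' -Name 'DeltaLakeLS' -DefinitionFile $definitionFile
```

Keeping the definition as a function that returns JSON makes it easy to inspect the result before anything is sent to Azure.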

When creating a new Data Flow in Azure Data Factory, this new connection can be used as an inline data source with the type of ‘Delta’.

For example, as shown in the screenshot below:

This is all that is needed to begin working with data on Delta Lake.

What is Varigence working on now?

The Varigence team has updated the BimlFlex App, its code generation patterns and BimlStudio to facilitate these features. Work is underway to complete the templates and make sure everything works as expected.

In the next post we will investigate how these concepts translate into working with BimlFlex to automate solutions that read from, or write to, a Delta Lake.

Important BimlFlex documentation updates

Having up-to-date documentation is something we work on every day, and recently we have made significant updates in our BimlFlex documentation site.

Our reference documentation will always be up to date, because this is automatically generated from our framework design. Various additional concepts have been explained and added.

For example, a more complete guide to using Extension Points has been added. Extension Points are an important feature that allows our customers to use the out-of-the-box framework while also making customizations for any specific needs that may arise.

Have a look at the following topics:

- Extension Points, also with links to updated reference documentation on each Extension Point.

- Delivering an Azure Data Factory solution using BimlFlex.

- An overview of the key BimlFlex mechanisms.

- BimlFlex Settings and Overrides.

Introducing the BimlFlex Community GitHub Repository

The BimlCatalog GitHub is now BimlFlex Community

We have rebranded the 'BimlCatalog' public open source repository on GitHub to BimlFlex Community.

We hope this provides our network of BimlFlex practitioners with easier access to some of our code, solutions and scripts that complement working with BimlFlex.

Examples of this are common queries on the BimlCatalog database, which contains the runtime (data logistics) information. Another example is the Power BI model that can be used to visualise some of this runtime information.

The BimlFlex App also provides similar reporting capability, but by providing a public repository we believe this makes it easier to add and customize solutions that complement the BimlFlex platform for data solution automation.

The Fastest Snowflake Data Vault

Overview

We sponsored WWDVC along with Snowflake this year, and Kent Graziano from Snowflake gave an excellent presentation on using multiple compute warehouses to load your Data Vault in parallel.

He also mentioned that instead of using hash keys, you could use the business key for integration, and Dan Linstedt confirmed that this is an approved approach. However, they were both quick to point out that you should verify whether this approach is right for you. Before his presentation, Kent told me, or more correctly challenged the vendors, to see who could add support for this.

Snowflake Parallel Automation

Well, challenge accepted, and we are now the only vendor that offers Snowflake Data Vault automation taking advantage of their unique features. In the webcast, we demonstrate how you can switch between HashKey and BusinessKey implementations and also configure multiple Snowflake compute warehouses for extreme parallel loading.

Watch the Webinar

See it in action